| Package | Version | Citation |
|---|---|---|
| base | 4.2.2 | @base |
| correlation | 0.8.4 | @ |
| easystats | 0.6.0.8 | @easystats |
| emo | 0.0.0.9000 | @emo |
| equatiomatic | 0.3.1 | @equatiomatic |
| flair | 0.0.2 | @flair |
| GGally | 2.1.2 | @GGally |
| gganimate | 1.0.8 | @gganimate |
| ggpmisc | 0.5.0 | @ggpmisc |
| ggstatsplot | 0.9.4 | @ggstatsplot |
| huxtable | 5.5.0 | @huxtable |
| kableExtra | 1.3.4 | @kableExtra |
| knitr | 1.41 | @knitr2014; @knitr2015; @knitr2022 |
| pacman | 0.5.1 | @pacman |
| papaja | 0.1.1.9001 | @papaja |
| parameters | 0.21.1.2 | @parameters |
| patchwork | 1.1.2 | @patchwork |
| performance | 0.10.4.1 | @performance |
| ppcor | 1.1 | @ppcor |
| pwr | 1.3.0 | @pwr |
| report | 0.5.7.9 | @report |
| rmarkdown | 2.14 | @rmarkdown2018; @rmarkdown2020; @rmarkdown2022 |
| see | 0.8.0.2 | @see |
| skimr | 2.1.4 | @skimr |
| supernova | 2.5.6 | @supernova |
| tidyverse | 1.3.2 | @tidyverse |
| xaringan | 0.26 | @xaringan |
| xaringanExtra | 0.7.0 | @xaringanExtra |
| xaringanthemer | 0.4.1 | @xaringanthemer |

GLM I: Continuous/Numerical Predictors

Princeton University

2023-10-11

Packages

GitHub

Overview

Intro to GLM/Regression analysis

Why regression?

How does it work?

Drawing lines

Error and more error

Regression analysis in R

Fitting and interpreting a simple model

Sums of squares

Model fit and \(R^2\)

Testing assumptions

Purposes of Regression

Prediction

Useful if we want to forecast the future

Focus is on predicting future values of \(Y\)

Netflix trying to guess your next show

Predicting who will enroll in SNAP

Explanation

Here we want to explain the relationship between variables

Focus is on the effect of \(X\) on \(Y\)

- Estimating the effect of fluency on test performance

How does regression work?

Assume \(X\) and \(Y\) are both theoretically continuous quantities

- Make a scatter plot of the relationship between \(X\) and \(Y\)

Draw a line to approximate the relationship between \(X\) and \(Y\)

And that could plausibly work for data not in the sample

Find the mathy parts of the line and then interpret the math

Lines, Math, and Regression

Components of Regression

\[ {y_i} = \beta_0 + \beta_1{X_i} +{\varepsilon_i} \]

\[ {y_i} = b_0 + b_1{X_i} + {e_i} \]

What we’ve been referring to thus far as \({Y}\)

The outcome variable, response variable, or dependent variable

The outcome is the thing we are trying to explain or predict

What we’ve been referring to thus far as \({X}\)

The explanatory variables, predictor variables, or independent variables

- Explanatory variables are things we use to explain or predict variation in \(Y\)

Drawing Lines with Math

- Remember \(y = mx + b\) from high school algebra

\[ {y_i} = \beta_0 + \beta_1{X_i} +{\varepsilon_i} \]

\[ {y_i} = b_0 + b_1{X_i} + {e_i} \]

\(y_i\) is the response for the \(i^{th}\) observation

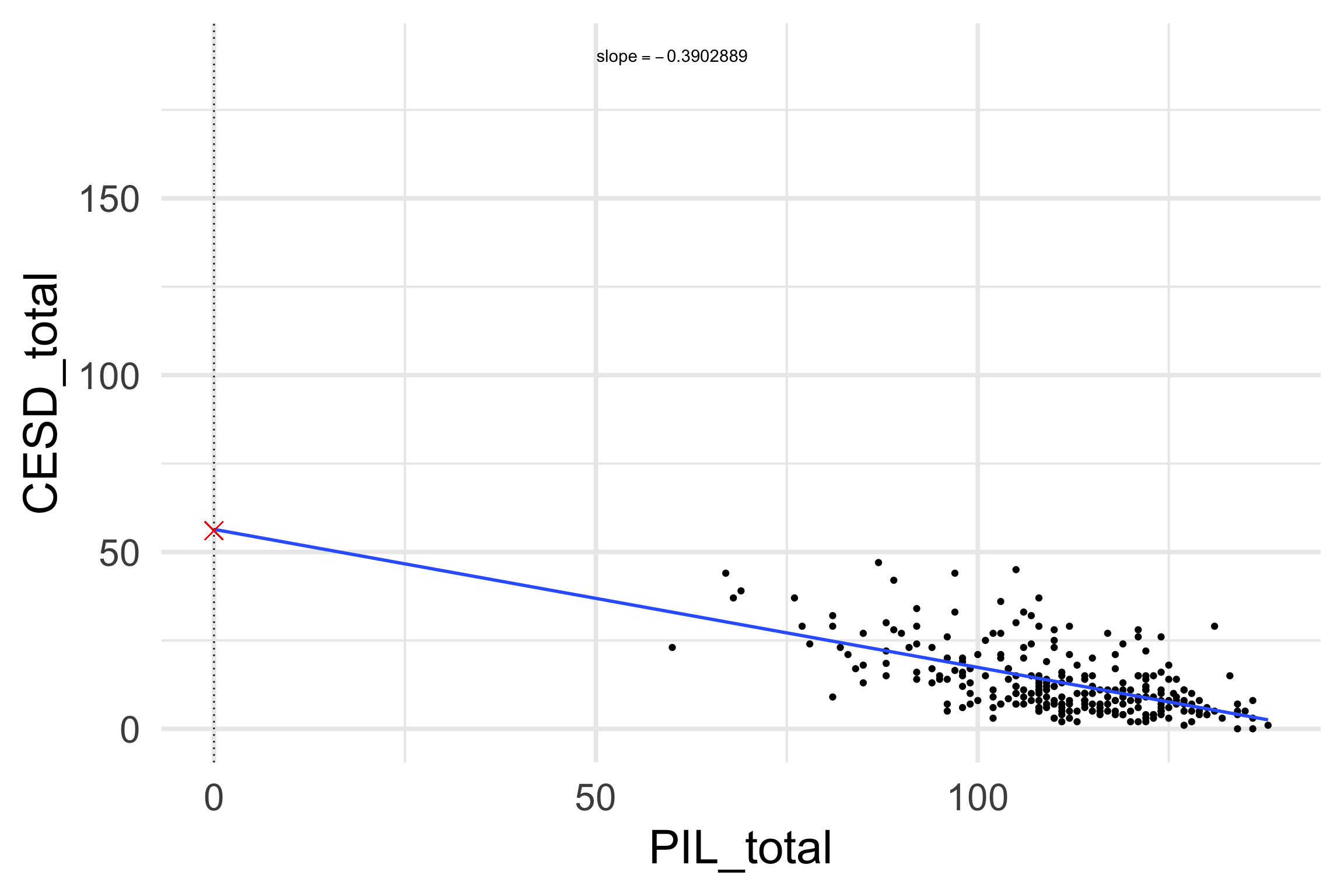

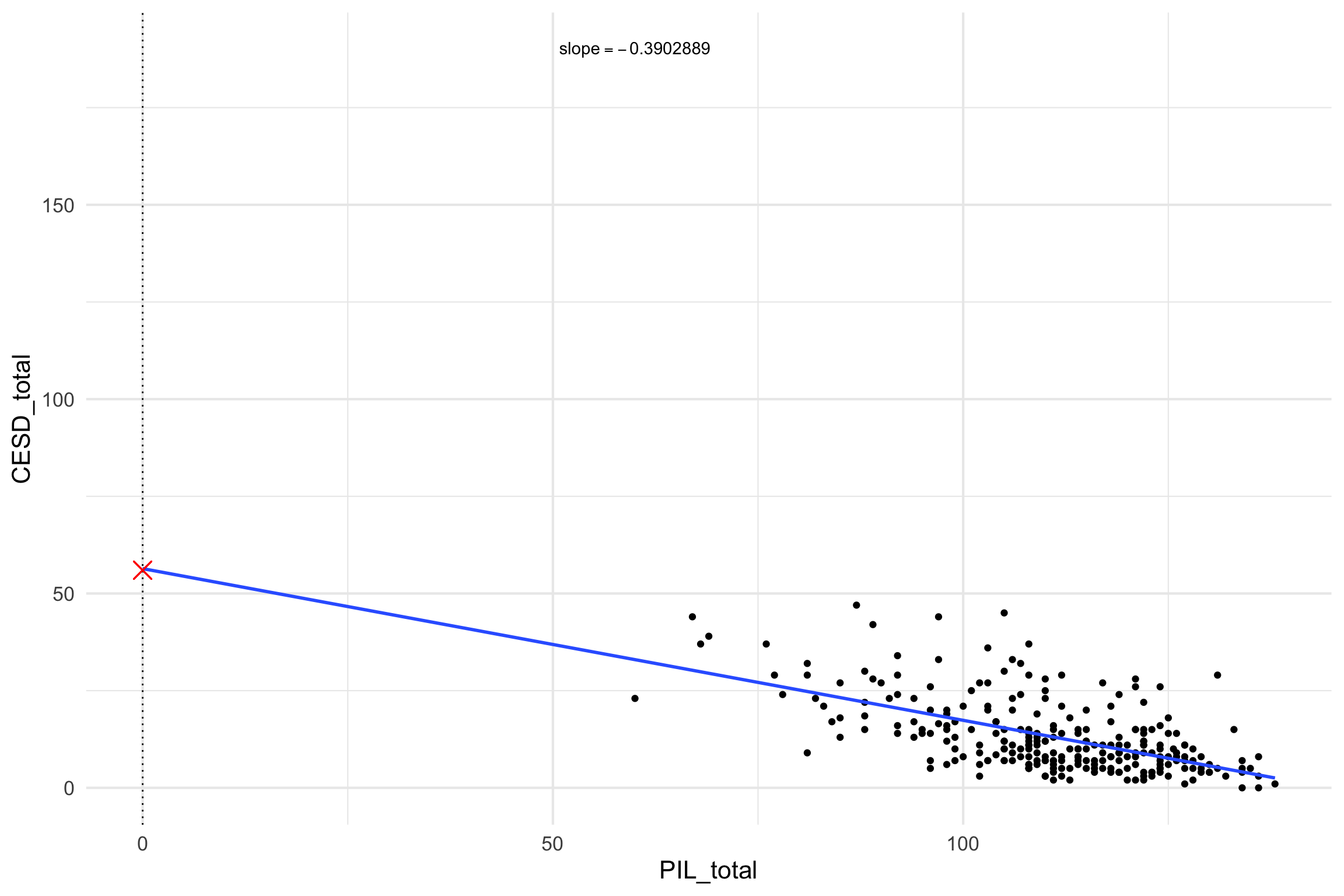

\(b_0\) is the intercept, typically the expected value of \(y\) when \(x = 0\)

\(b_1\) is the slope coefficient, the average change in \(y\) for each one-unit increase in \(x\)

\(e_i\) is a random noise term
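To make the pieces of the equation concrete, here is a minimal sketch that simulates data from \(y_i = b_0 + b_1 x_i + e_i\) and then recovers the coefficients. The intercept, slope, sample size, and noise level are made-up values chosen only for illustration.

```r
# Simulate data from y_i = b0 + b1 * x_i + e_i
# (all numbers below are hypothetical, chosen for illustration)
set.seed(123)
n  <- 200
b0 <- 2      # hypothetical intercept
b1 <- 0.5    # hypothetical slope

x <- runif(n, min = 0, max = 10)
e <- rnorm(n, mean = 0, sd = 1)   # random noise term
y <- b0 + b1 * x + e

# Least squares should return estimates close to b0 and b1
fit <- lm(y ~ x)
coef(fit)
```

The estimates will not equal 2 and 0.5 exactly, because the noise term differs from sample to sample.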

Slopes and Intercepts

The intercept \(b_0\) captures the baseline value

- Point at which the regression line crosses the Y-axis

The slope \(b_1\) captures the rate of linear change in \(y\) as \(x\) increases

- Tells us how much we would expect y to change given a one-unit change in x

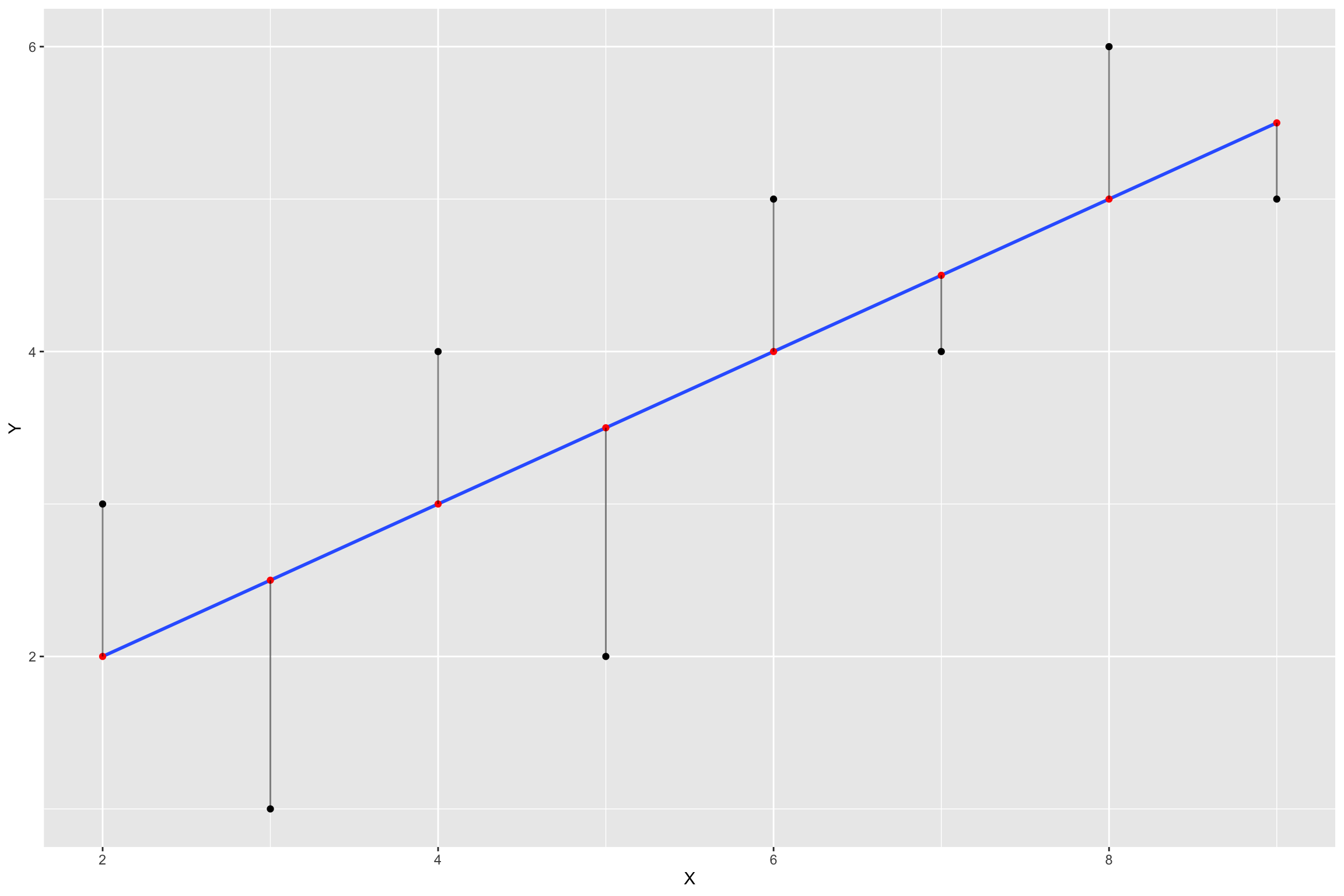

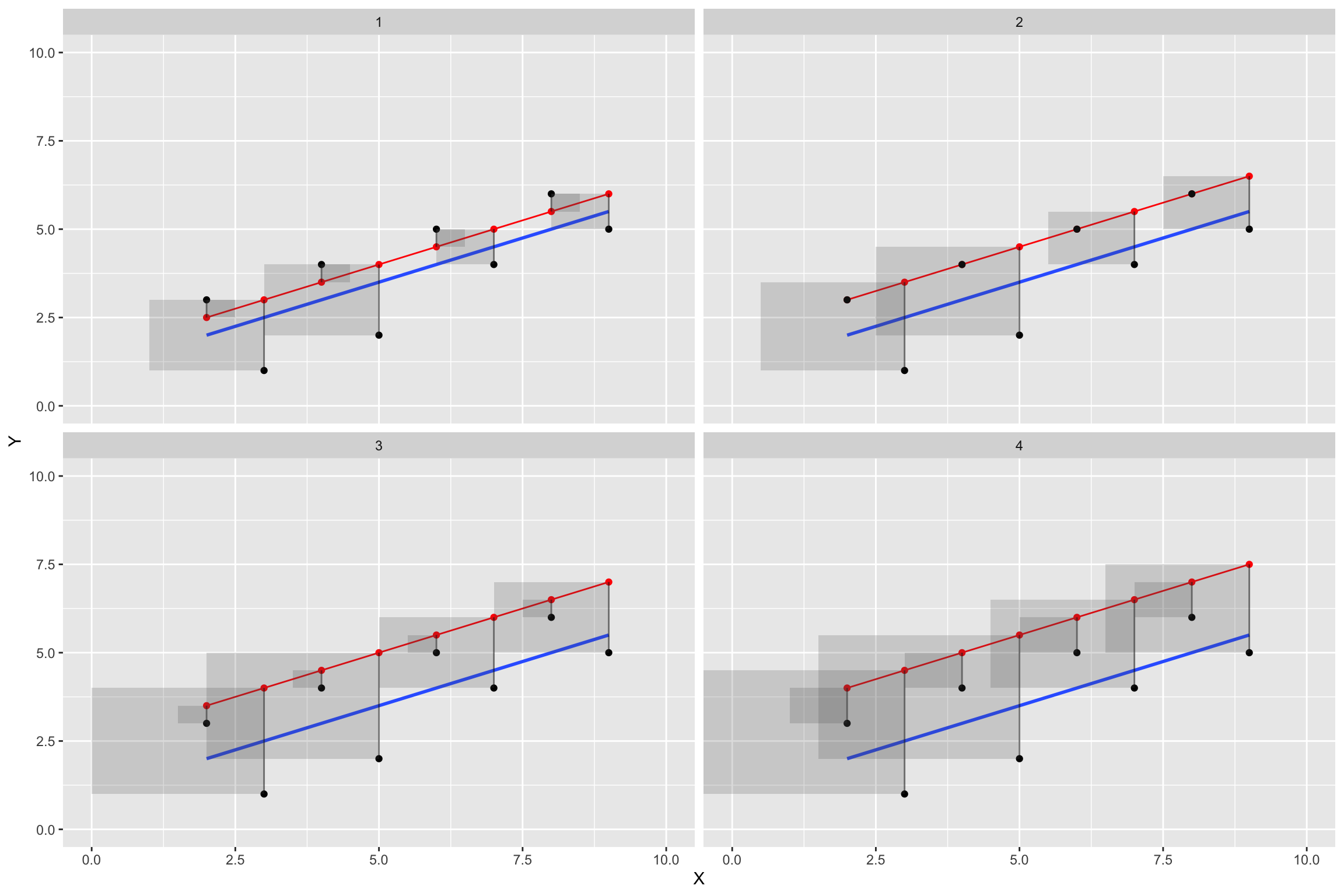

The Best Fit Line and Least Squares

Many lines could fit the data, but which is best?

- The best fitting line is the one that produces the “least squares”: it minimizes the sum of squared differences between the observed and predicted values of Y

We use a method known as least squares to obtain estimates of \(b_0\) and \(b_1\)
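Written out, least squares picks the \(b_0\) and \(b_1\) that make the sum of squared errors as small as possible. For a single predictor this has the standard closed-form solution below, using the same symbols as the regression equation above:

\[ (b_0, b_1) = \underset{b_0,\, b_1}{\arg\min} \sum_{i=1}^n{(y_i - b_0 - b_1 x_i)^2} \]

\[ b_1 = \frac{\sum_{i=1}^n{(x_i - \bar{x})(y_i - \bar{y})}}{\sum_{i=1}^n{(x_i - \bar{x})^2}} \qquad b_0 = \bar{y} - b_1\bar{x} \]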

Gauss-Markov and Linear Regression

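The Gauss-Markov theorem states that if a series of assumptions hold, ordinary least squares is the best linear unbiased estimator (BLUE)

Best: smallest variance among linear unbiased estimators

Linear: the estimates are linear functions of the observed outcome values

Unbiased: on average, the estimates equal the true parameter values

Estimator: a rule for computing estimates from sample data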

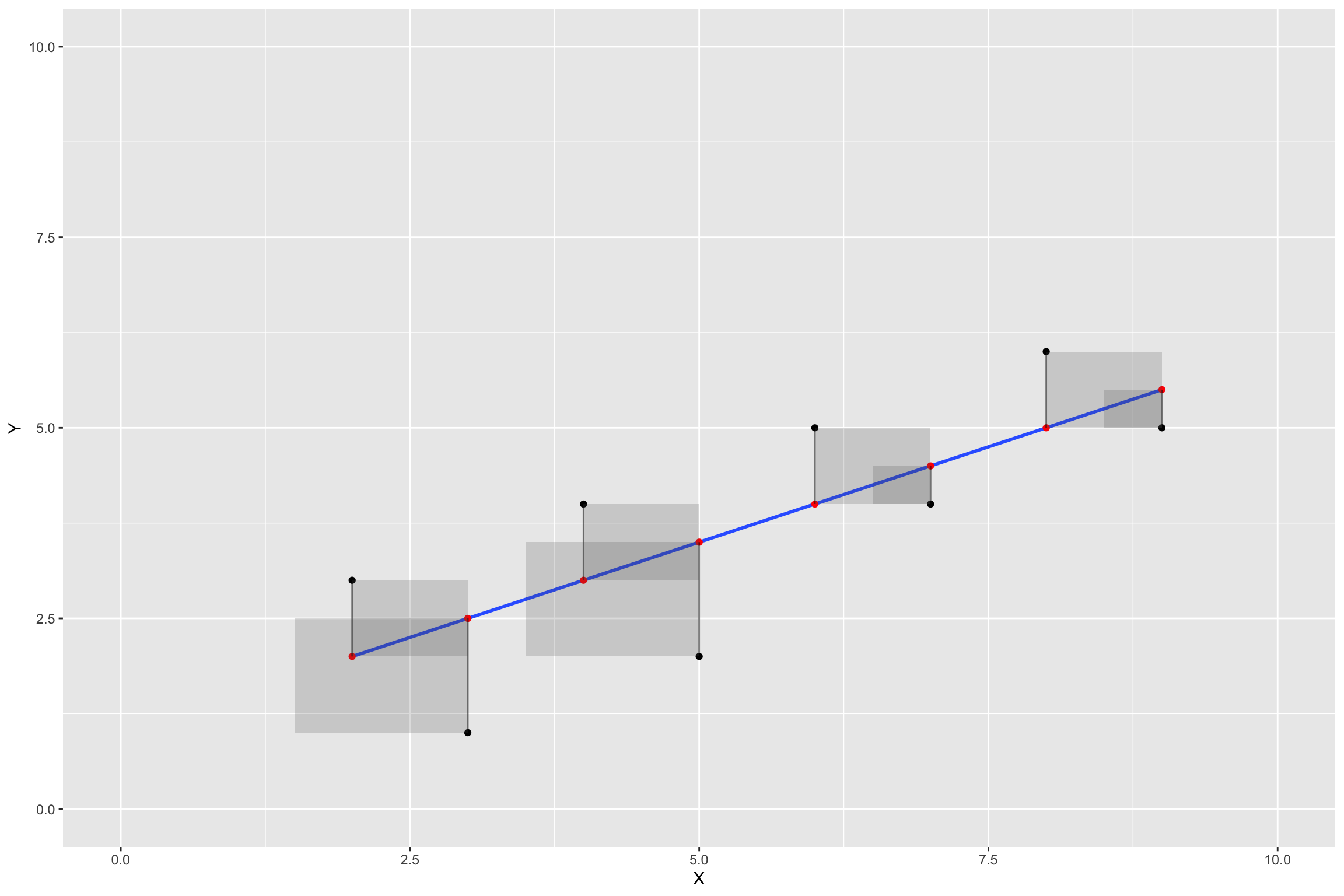

Error

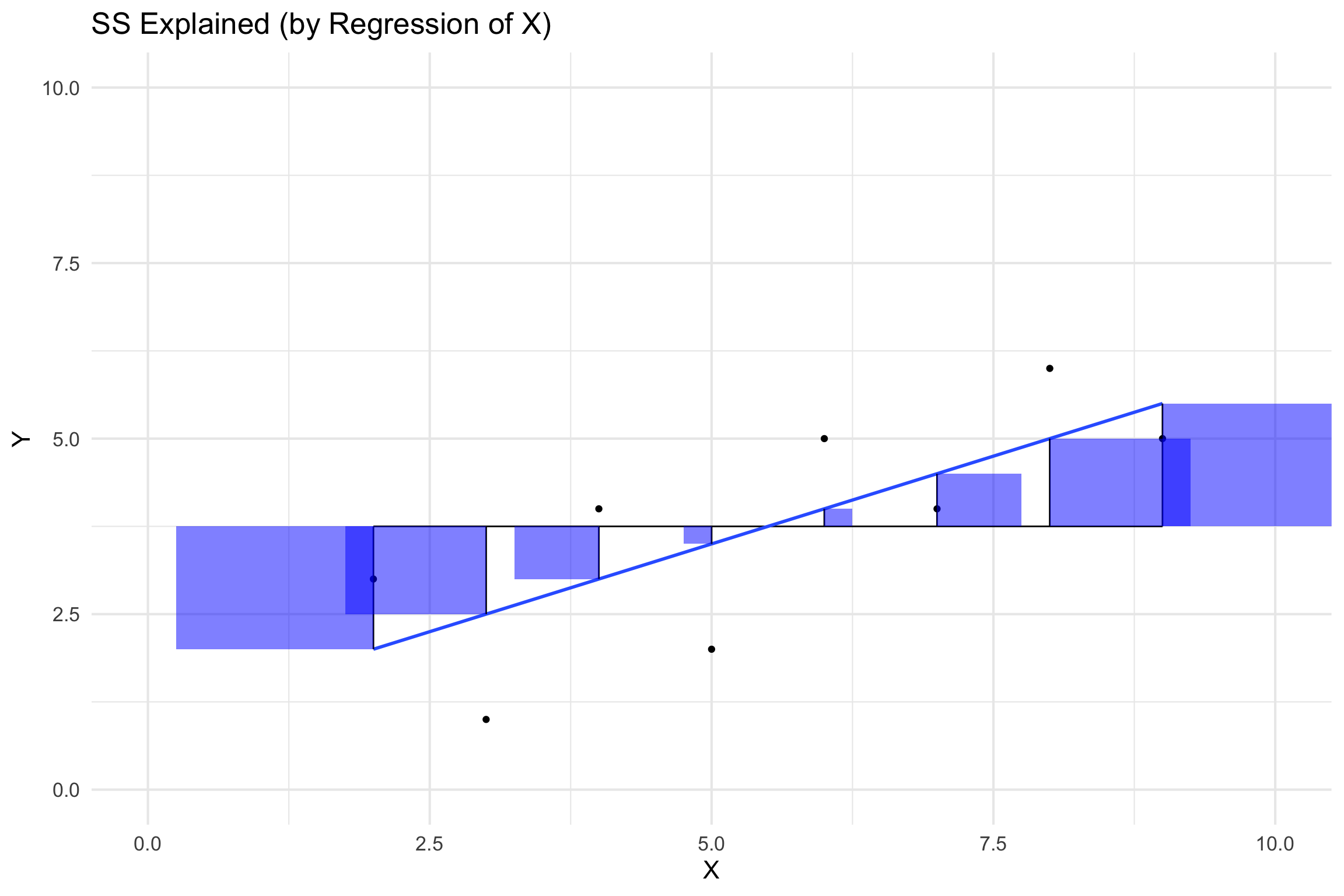

Visualize Errors as Squares

Best fit line

Shows two concepts:

Regression line is “best fit line”

The “best fit line” is the one that minimizes the sum of the squared deviations between each point and the line
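The idea that the best fit line has the smallest sum of squared deviations can be checked directly. The sketch below uses R's built-in `mtcars` data as a stand-in (the slides' own data are not included here) and compares the least squares line with two arbitrary alternatives; `sse()` is a helper defined only for this illustration.

```r
# Sum of squared deviations between observed y and a candidate line
sse <- function(intercept, slope, x, y) {
  sum((y - (intercept + slope * x))^2)
}

x <- mtcars$wt    # stand-in predictor
y <- mtcars$mpg   # stand-in outcome

fit <- lm(y ~ x)
b   <- unname(coef(fit))   # b[1] = intercept, b[2] = slope

sse_best  <- sse(b[1], b[2], x, y)         # least squares line
sse_flat  <- sse(mean(y), 0, x, y)         # flat line at the mean of y
sse_steep <- sse(b[1], b[2] * 1.5, x, y)   # same intercept, 50% steeper slope

c(best = sse_best, flat = sse_flat, steep = sse_steep)
```

Any other intercept and slope you try should give a sum of squares at least as large as `sse_best`.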

Worse Fit Lines

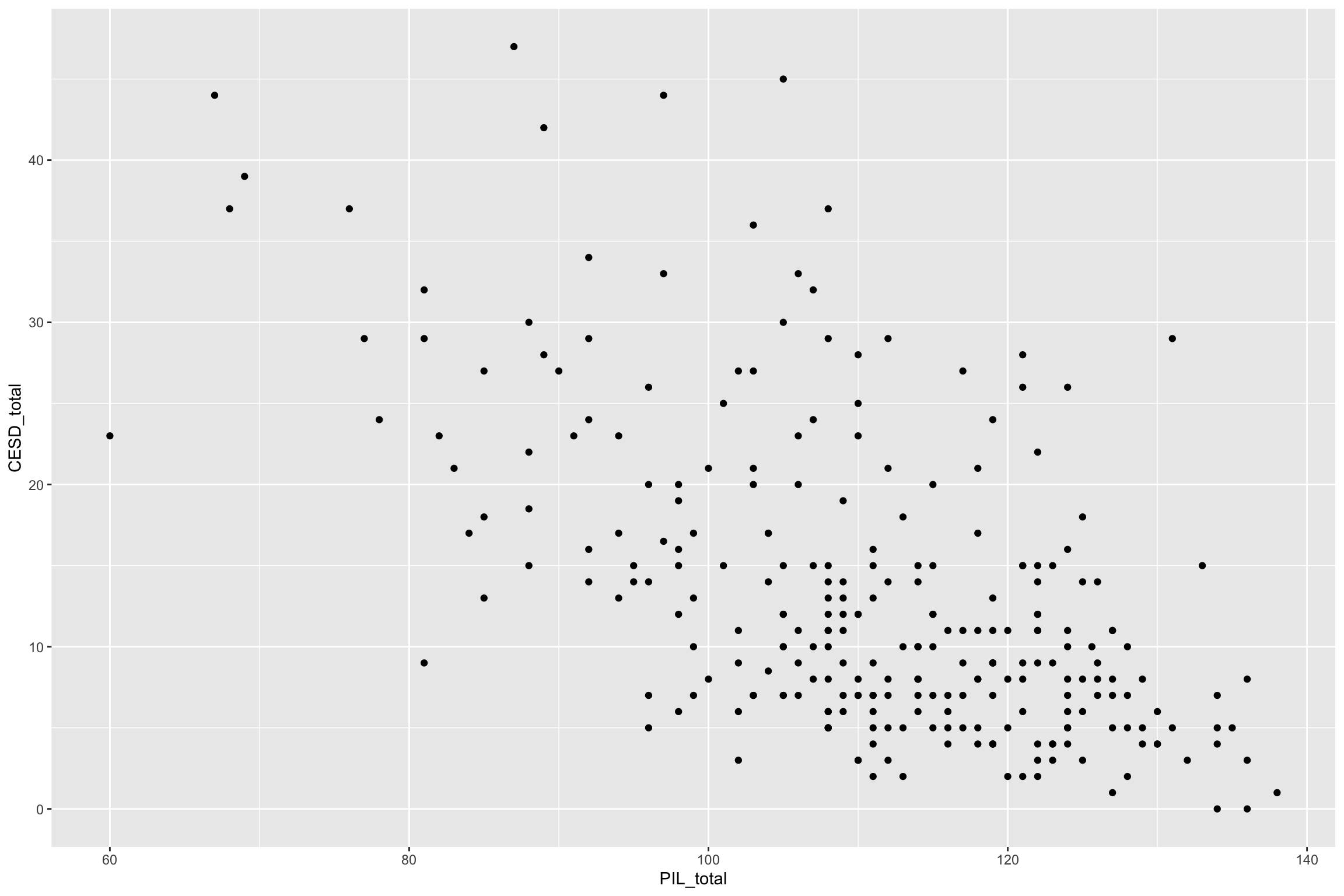

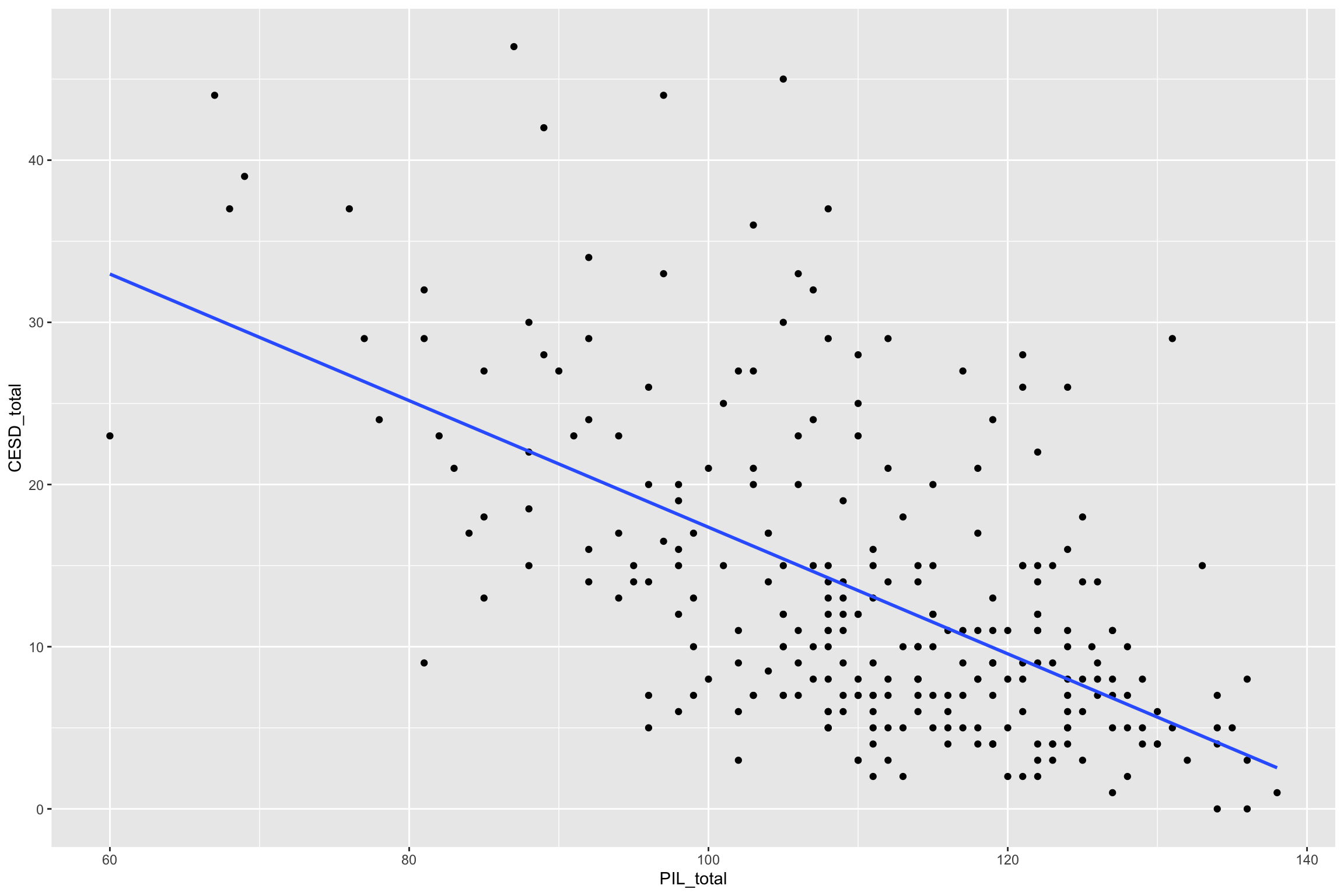

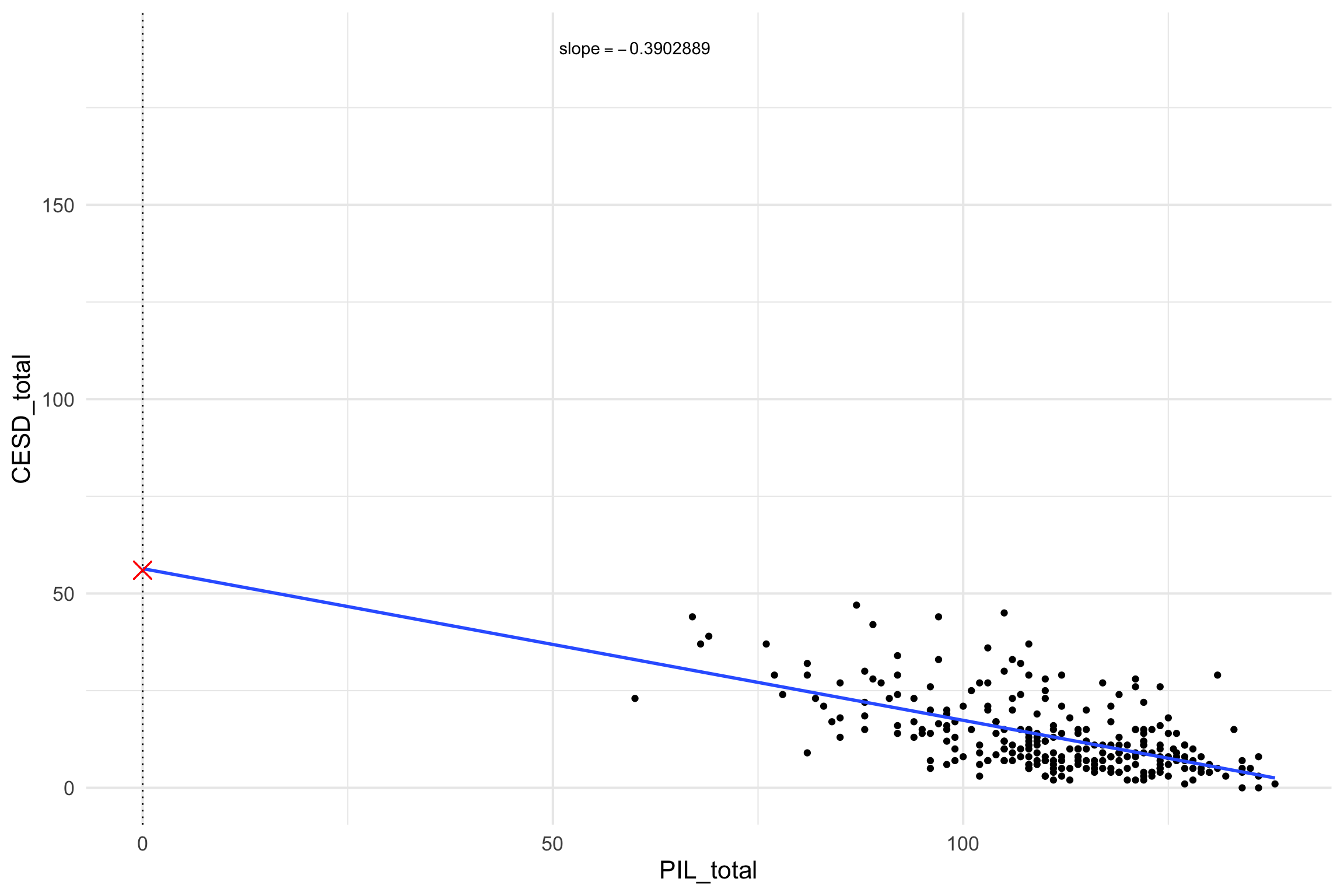

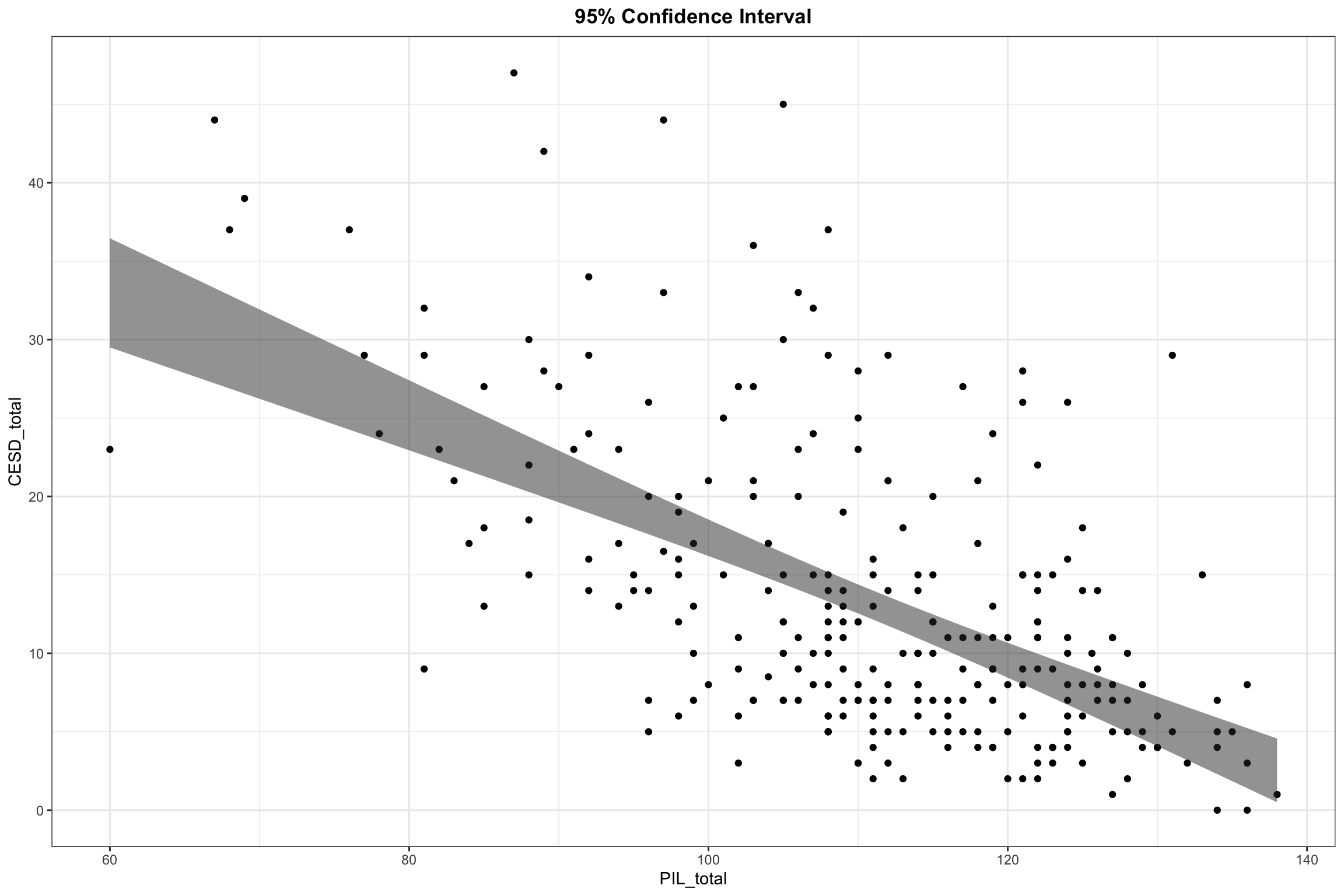

Simple Regression Example

- Depression scores and meaningfulness (in one’s life)

Simple Regression Example

lm() in R
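A minimal sketch of fitting a simple regression with `lm()`. Because the depression and meaning data are not included here, it uses the built-in `mtcars` data as a stand-in, with `mpg` as the outcome and `wt` as the predictor; with the slides' data the formula would presumably be `CESD_total ~ PIL_total`.

```r
# Fit a simple regression: outcome ~ predictor
# (mtcars is a stand-in data set, not the data used in the slides)
model <- lm(mpg ~ wt, data = mtcars)

# Intercept (b0) and slope (b1)
coef(model)

# Coefficient table: estimate, standard error, t value, p value
coef_table <- summary(model)$coefficients
coef_table
```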

The Relation Between Correlation and Regression

\[\hat{r} = \frac{covariance_{xy}}{s_x * s_y}\]

\[\hat{\beta_x} = \frac{\hat{r} * s_x * s_y}{s_x^2} = \hat{r} * \frac{s_y}{s_x}\]

\[\hat{\beta_0} = \bar{y} - \hat{\beta_x}\bar{x}\]
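The link between correlation and regression can be verified numerically. This sketch, again using `mtcars` as a stand-in data set, builds the slope from \(r\), \(s_x\), and \(s_y\), builds the intercept from the two means, and compares both with what `lm()` returns.

```r
x <- mtcars$wt    # stand-in predictor
y <- mtcars$mpg   # stand-in outcome

r <- cor(x, y)

# Slope from the correlation and the two standard deviations
slope_from_r <- r * sd(y) / sd(x)

# Intercept from the means and the slope
intercept_from_means <- mean(y) - slope_from_r * mean(x)

fit <- lm(y ~ x)

# TRUE if the hand-computed values match lm()
matches_lm <- all.equal(unname(coef(fit)),
                        c(intercept_from_means, slope_from_r))
matches_lm
```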

lm() in R

How would we interpret \(b_0\)?

\(b_0\):

When x = 0 (a PIL_total score of 0), the predicted CESD_total is \(b_0\) = 56.39

| term | estimate | std.error | statistic | p.value |
|---|---|---|---|---|
| (Intercept) | 56.4 | 3.75 | 15.0 | 2.43e-37 |
| PIL_total | -0.390 | 0.0336 | -11.6 | 1.95e-25 |

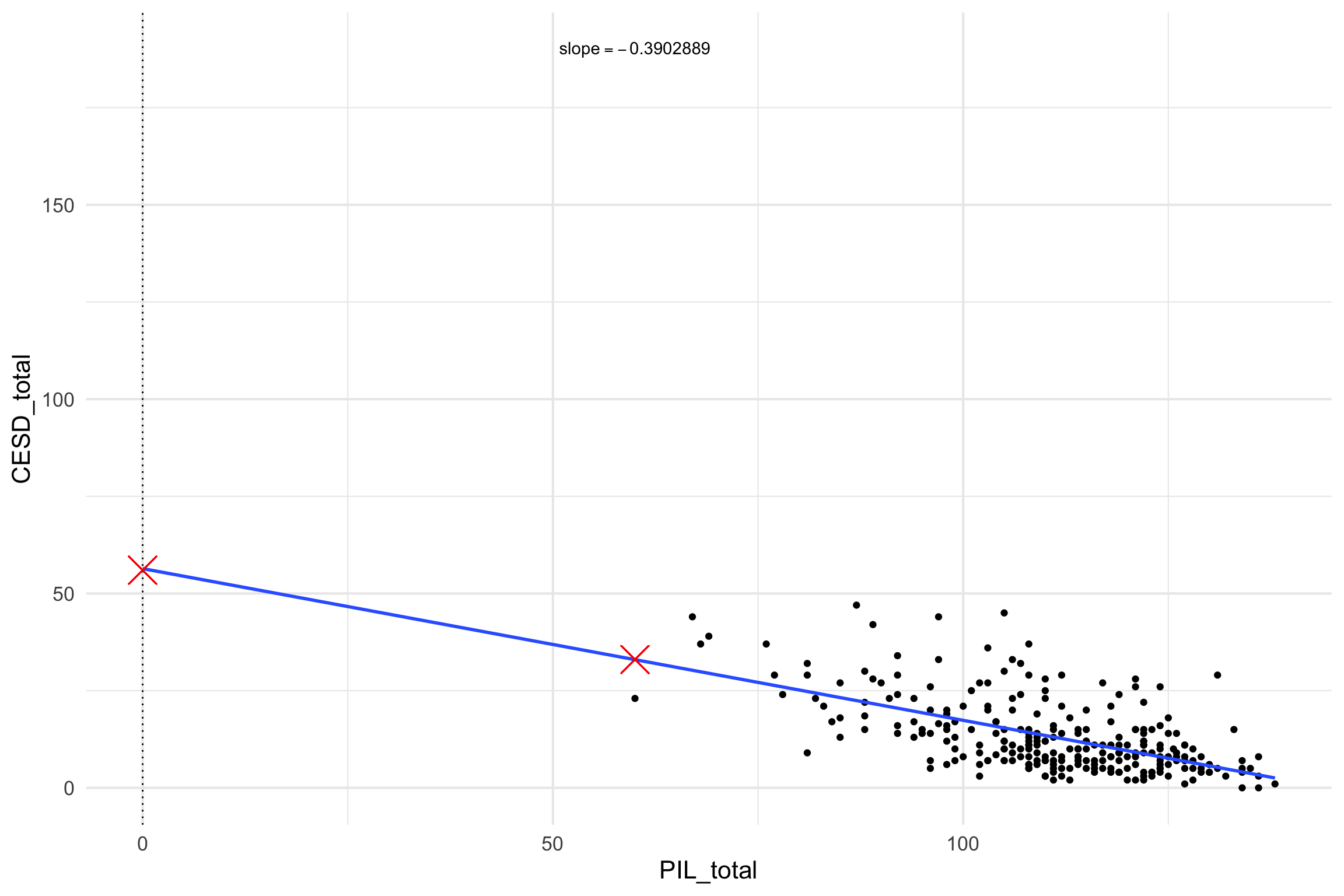

lm() in R

How would we interpret \(b_1\)?

\(b_1\):

For each one-unit increase in meaning (PIL_total), predicted depression scores (CESD_total) decrease by 0.39 points

| term | estimate | std.error | statistic | p.value |
|---|---|---|---|---|
| (Intercept) | 56.4 | 3.75 | 15.0 | 2.43e-37 |
| PIL_total | -0.390 | 0.0336 | -11.6 | 1.95e-25 |

lm() in R

\[\hat{CESD_{total}} = 56 + (-.39)*PIL_{total}\]

lm() in R

\[ \hat{CESD_{total}} = 56 + (-.39)*60\]
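Carrying the arithmetic through for a meaning score of 60, with the rounded coefficients:

\[ \hat{CESD_{total}} = 56 + (-.39)*60 = 56 - 23.4 = 32.6 \]

With the unrounded estimates from the coefficient table (56.3955372 and -0.3902889), the same calculation gives a slightly different value:

\[ \hat{CESD_{total}} = 56.3955372 + (-0.3902889)*60 \approx 32.98 \]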

Predictions

predict(object, newdata)

object = model

newdata = values to predict
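A short sketch of `predict()` in use, with `mtcars` standing in for the slides' data. The `newdata` argument must be a data frame whose column name matches the predictor in the model formula; the three weights below are hypothetical values chosen for illustration.

```r
# Stand-in model (not the slides' data)
model <- lm(mpg ~ wt, data = mtcars)

# Hypothetical new predictor values; the column name must match the formula
new_cars <- data.frame(wt = c(2.5, 3.0, 3.5))

# Point predictions
pred <- predict(model, newdata = new_cars)
pred

# Predictions with a 95% confidence interval for the fitted line
pred_ci <- predict(model, newdata = new_cars,
                   interval = "confidence", level = 0.95)
pred_ci
```

Each prediction is simply \(b_0 + b_1 x\) evaluated at the new value of \(x\).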

Residuals, Fitted Values, and Model Fit

- If we want to make inferences about the regression parameter estimates, then we also need an estimate of their variability

- We also need to know how well our data fit the linear model

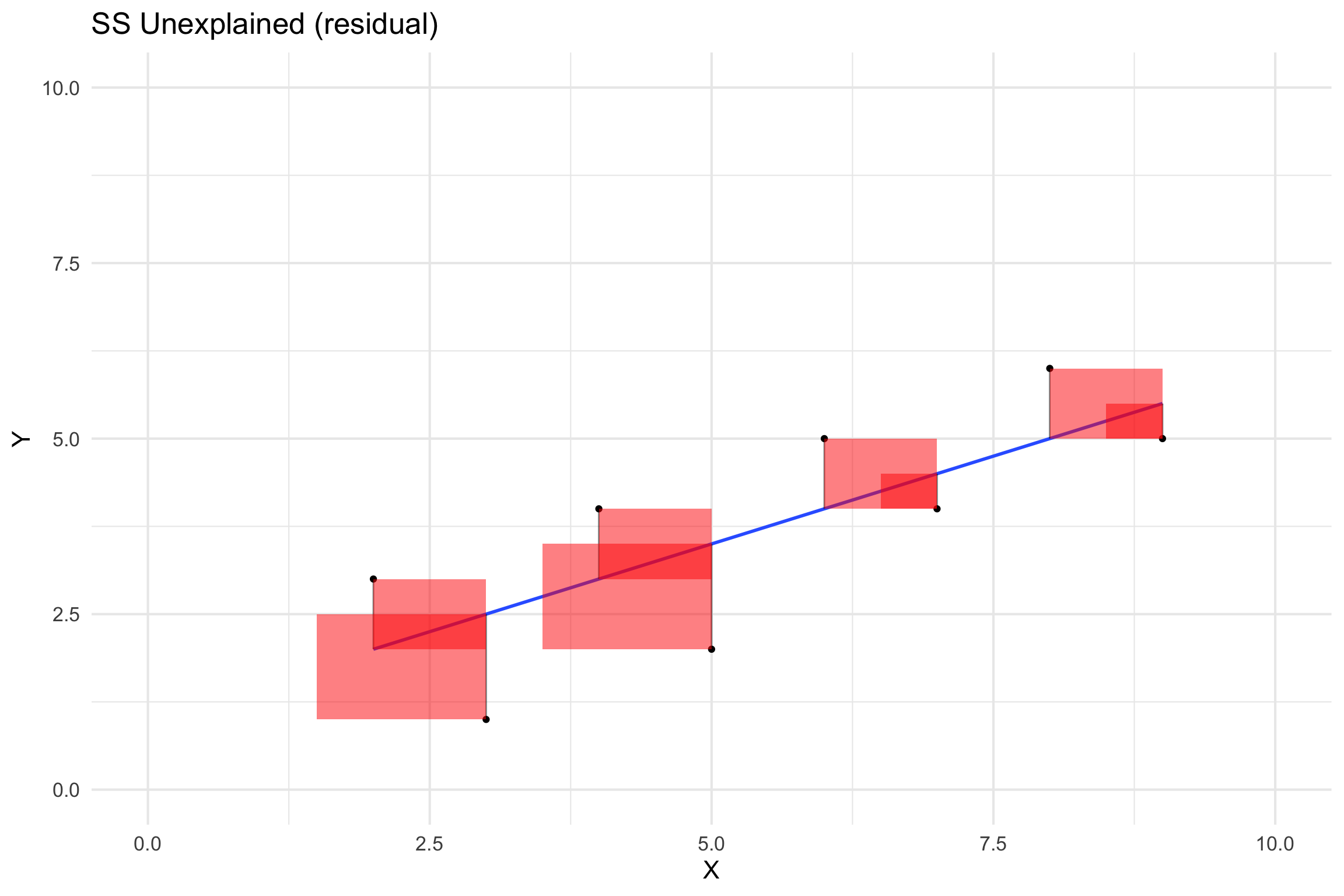

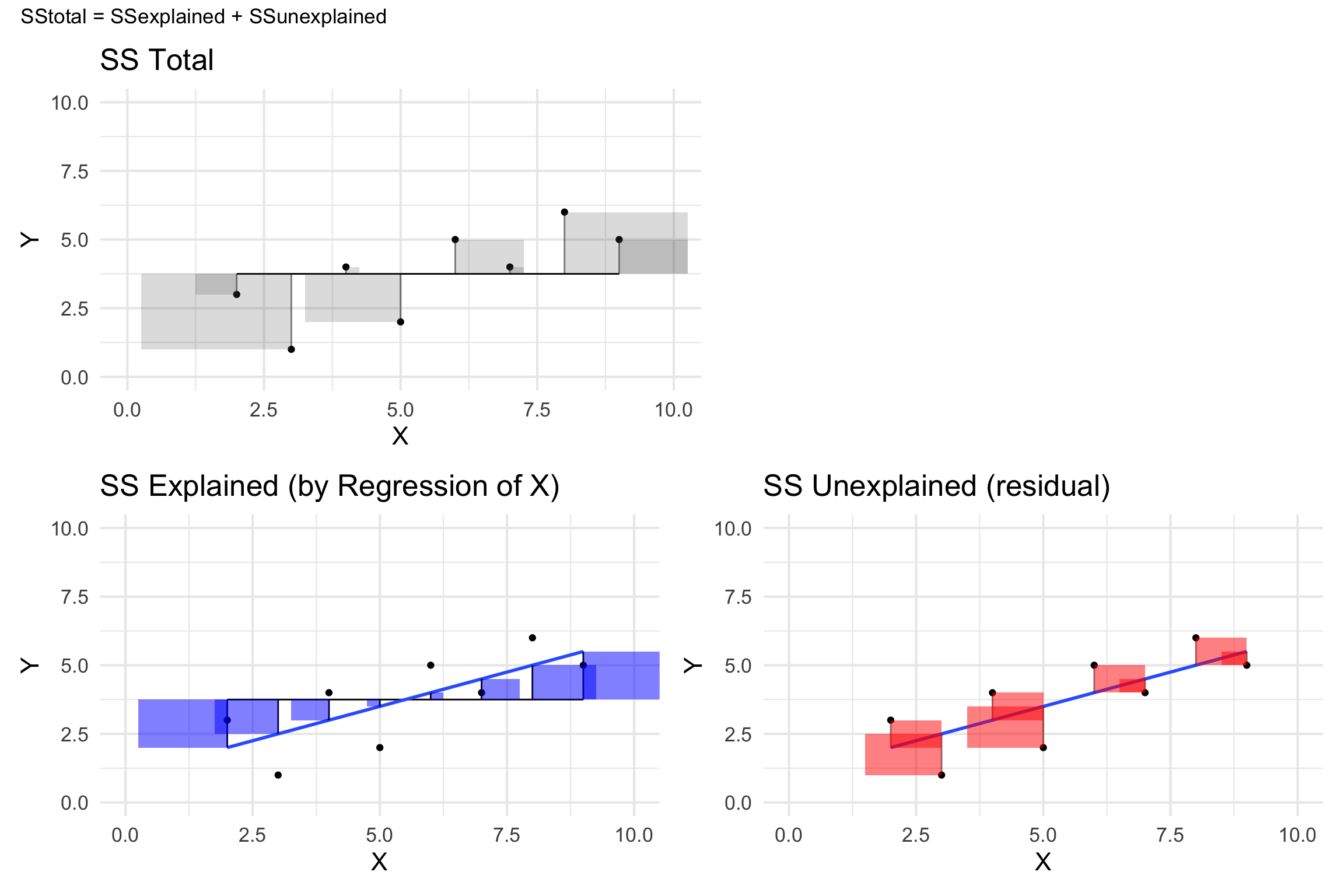

SS Unexplained (Sums of Squares Error)

\[residual = y - \hat{y} = y - (x*\hat{\beta_x} + \hat{\beta_0})\]

\[SS_{error} = \sum_{i=1}^n{(y_i - \hat{y_i})^2} = \sum_{i=1}^n{residuals^2}\]

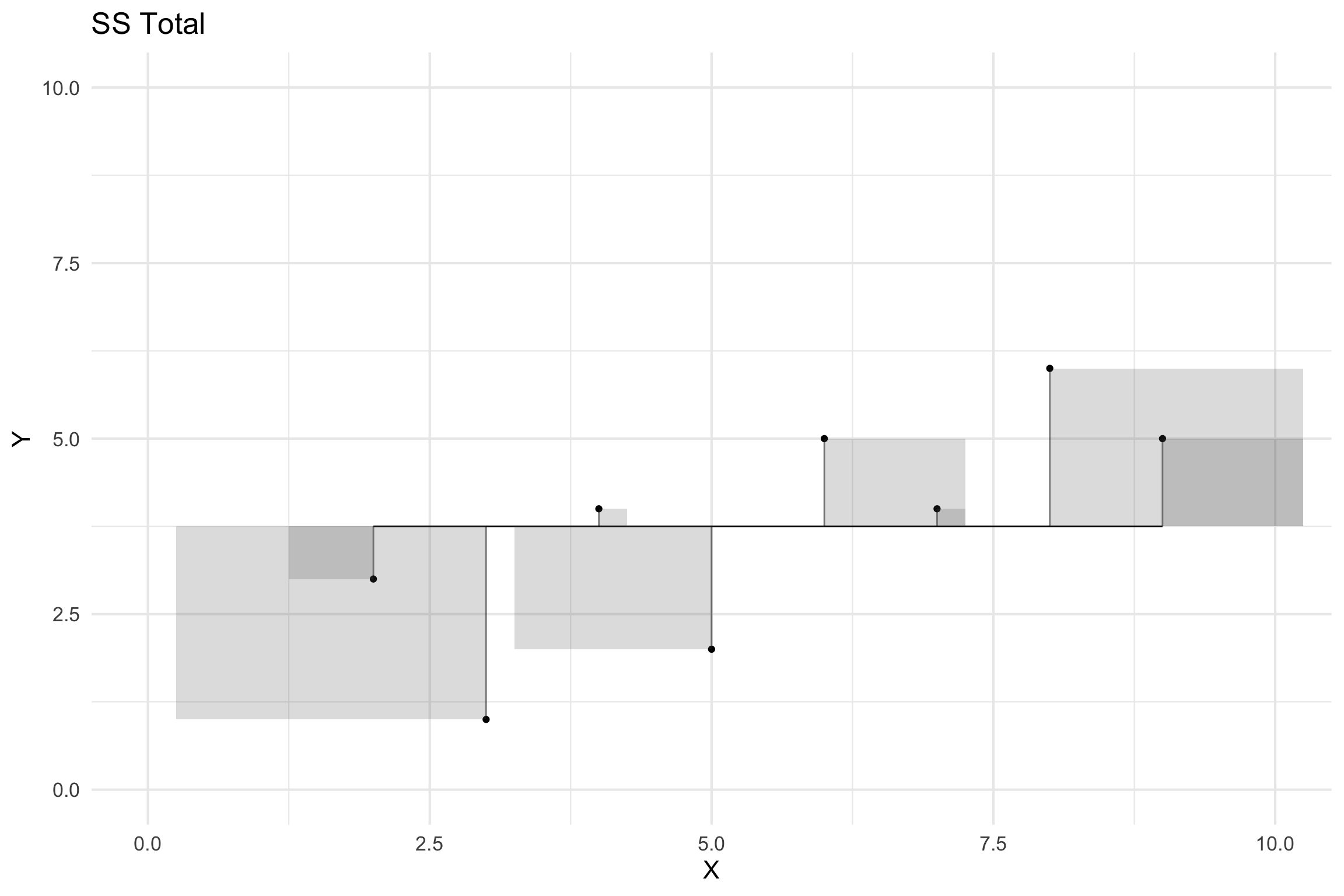

SS Total (Sums of Squares Total)

The sum of the squared differences between the observed dependent variable and its mean.

\[SS_{total} = \sum{(y_i - \bar{y})^2}\]

SS Explained (Sums of Squares Regression)

The sum of the squared differences between the predicted value and the mean of the dependent variable

\[SS_{Explained} = \sum (\hat{y_i} - \bar{y})^2\]

All Together
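The three sums of squares fit together as \(SS_{total} = SS_{Explained} + SS_{error}\), and \(R^2\) is the explained share of the total. The sketch below checks both facts on the stand-in `mtcars` data (not the slides' data).

```r
# Stand-in model (not the slides' data)
model <- lm(mpg ~ wt, data = mtcars)

y     <- mtcars$mpg
y_hat <- fitted(model)

ss_total     <- sum((y - mean(y))^2)       # observed vs. mean
ss_explained <- sum((y_hat - mean(y))^2)   # predicted vs. mean
ss_error     <- sum((y - y_hat)^2)         # observed vs. predicted

# The decomposition: total = explained + error
decomposition_holds <- all.equal(ss_total, ss_explained + ss_error)

# R^2 as the proportion of variation explained
r_squared <- ss_explained / ss_total
r_squared_matches <- all.equal(r_squared, summary(model)$r.squared)

c(total = ss_total, explained = ss_explained, error = ss_error, R2 = r_squared)
```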

broom Regression

- tidy(): coefficient table

- glance(): model summary

- augment(): adds information about each observation

Regression: NHST

\[H_0\colon \ \beta_1=0\] \[H_1\colon \ \beta_1\ne0\]

\[\begin{array}{c} t_{N - p} = \frac{\hat{\beta} - \beta_{expected}}{SE_{\hat{\beta}}}\\ t_{N - p} = \frac{\hat{\beta} - 0}{SE_{\hat{\beta}}}\\ t_{N - p} = \frac{\hat{\beta} }{SE_{\hat{\beta}}} \end{array}\]
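As a worked example, plugging in the unrounded slope estimate and standard error for PIL_total reported in these slides (-0.3902889 and 0.0336426):

\[ t_{N - p} = \frac{-0.3902889 - 0}{0.0336426} \approx -11.60 \]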

# A tibble: 2 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 56.4 3.75 15.0 2.43e-37

2 PIL_total -0.390 0.0336 -11.6 1.95e-25

[1] 1.96899

Calculate Standard Error

\[MS_{error} = \frac{SS_{error}}{df} = \frac{\sum_{i=1}^n{(y_i - \hat{y_i})^2} }{N - p}\]

\[ SE_{model} = \sqrt{MS_{error}} \]

\[SE_{\hat{\beta}_x} = \frac{SE_{model}}{\sqrt{{\sum{(x_i - \bar{x})^2}}}}\]
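The standard error formulas can be followed step by step in R. This sketch uses `mtcars` as a stand-in data set and assumes \(p\) counts the estimated coefficients (here 2: intercept and slope); it then compares the hand-computed values with the ones `summary()` reports.

```r
# Stand-in model (not the slides' data)
model <- lm(mpg ~ wt, data = mtcars)

x <- mtcars$wt
n <- nrow(mtcars)
p <- length(coef(model))   # assumed: number of estimated coefficients

# Mean squared error and the model (residual) standard error
ms_error <- sum(residuals(model)^2) / (n - p)
se_model <- sqrt(ms_error)

# Standard error of the slope
se_slope <- se_model / sqrt(sum((x - mean(x))^2))

# t statistic and two-sided p value for H0: slope = 0
t_slope <- coef(model)[["wt"]] / se_slope
p_slope <- 2 * pt(abs(t_slope), df = n - p, lower.tail = FALSE)

# Compare with the values lm() reports
coefs <- summary(model)$coefficients
c(se_by_hand = se_slope, se_from_lm = coefs["wt", "Std. Error"])
```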

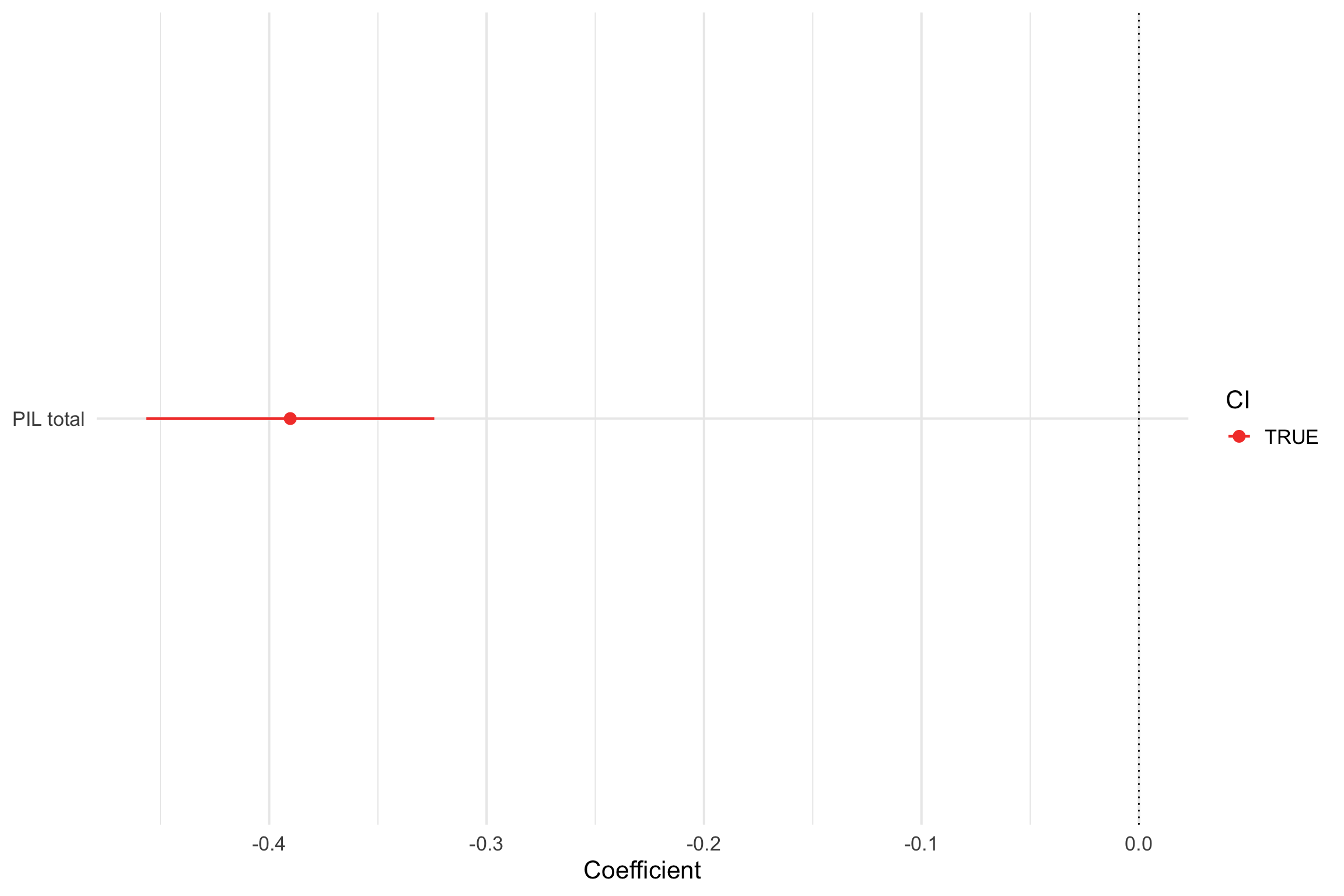

95% CIs

\[b_1 \pm t^\ast (SE_{b_1})\]

| term | estimate | std.error | statistic | p.value | conf.low | conf.high |

|---|---|---|---|---|---|---|

| (Intercept) | 56.3955372 | 3.7525824 | 15.02846 | 0 | 49.0068665 | 63.7842079 |

| PIL_total | -0.3902889 | 0.0336426 | -11.60104 | 0 | -0.4565296 | -0.3240481 |
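The confidence interval formula \(b_1 \pm t^\ast (SE_{b_1})\) can be reproduced by hand. The sketch below does so on the stand-in `mtcars` data, taking \(t^\ast\) from the t distribution with the model's residual degrees of freedom, and checks the result against `confint()`.

```r
# Stand-in model (not the slides' data)
model <- lm(mpg ~ wt, data = mtcars)

coefs <- summary(model)$coefficients
b1    <- coefs["wt", "Estimate"]
se_b1 <- coefs["wt", "Std. Error"]

# Critical t value for a 95% interval
t_star <- qt(0.975, df = df.residual(model))

# Interval by hand
ci_manual <- c(b1 - t_star * se_b1, b1 + t_star * se_b1)

# Interval from R
ci_builtin <- confint(model, "wt", level = 0.95)

ci_matches <- all.equal(ci_manual, as.numeric(ci_builtin))
ci_manual
```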

Getting Residuals and Predicted Values

| CESD_total | PIL_total | .fitted | .resid | .hat | .sigma | .cooksd | .std.resid |

|---|---|---|---|---|---|---|---|

| 28 | 121 | 9.170584 | 18.829416 | 0.0057970 | 7.602150 | 0.0176455 | 2.4601796 |

| 37 | 76 | 26.733583 | 10.266417 | 0.0268288 | 7.663762 | 0.0253376 | 1.3557879 |

| 20 | 98 | 18.147228 | 1.852772 | 0.0068267 | 7.689629 | 0.0002016 | 0.2422016 |

| 15 | 122 | 8.780295 | 6.219705 | 0.0062133 | 7.680888 | 0.0020653 | 0.8128131 |

| 7 | 99 | 17.756939 | -10.756939 | 0.0063593 | 7.661748 | 0.0063246 | -1.4058582 |

| 7 | 134 | 4.096829 | 2.903171 | 0.0142050 | 7.688375 | 0.0010455 | 0.3809315 |
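The same kinds of per-observation quantities can be pulled out with base R functions. The sketch below uses `mtcars` as a stand-in; the mapping to the `.fitted`, `.resid`, `.hat`, `.cooksd`, and `.std.resid` columns is my reading of the table above rather than something the slides state.

```r
# Stand-in model (not the slides' data)
model <- lm(mpg ~ wt, data = mtcars)

diagnostics <- data.frame(
  mpg       = mtcars$mpg,
  wt        = mtcars$wt,
  fitted    = fitted(model),          # predicted values
  resid     = residuals(model),       # observed minus predicted
  hat       = hatvalues(model),       # leverage
  cooksd    = cooks.distance(model),  # Cook's distance
  std_resid = rstandard(model)        # standardized residuals
)

head(diagnostics)

# Every observed value is its fitted value plus its residual
adds_up <- all.equal(diagnostics$mpg, diagnostics$fitted + diagnostics$resid)
adds_up
```

In the first row of the table above, for instance, 9.170584 + 18.829416 = 28, the observed CESD_total.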

Model Fit

Fitted line with 95% CIs

    # get CIs for fitted values
    model1 %>%
      augment(se_fit = TRUE, interval = "confidence") %>%
      knitr::kable()

| CESD_total | PIL_total | .fitted | .lower | .upper | .se.fit | .resid | .hat | .sigma | .cooksd | .std.resid |

|---|---|---|---|---|---|---|---|---|---|---|

| 28.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | 18.8294160 | 0.0057970 | 7.602150 | 0.0176455 | 2.4601796 |

| 37.0 | 76.0000 | 26.733583 | 24.2580456 | 29.209121 | 1.2572841 | 10.2664169 | 0.0268288 | 7.663762 | 0.0253376 | 1.3557879 |

| 20.0 | 98.0000 | 18.147228 | 16.8984852 | 19.395971 | 0.6342156 | 1.8527720 | 0.0068267 | 7.689629 | 0.0002016 | 0.2422016 |

| 15.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 6.2197049 | 0.0062133 | 7.680888 | 0.0020653 | 0.8128131 |

| 7.0 | 99.0000 | 17.756939 | 16.5517004 | 18.962178 | 0.6121206 | -10.7569391 | 0.0063593 | 7.661748 | 0.0063246 | -1.4058582 |

| 7.0 | 134.0000 | 4.096829 | 2.2955143 | 5.898143 | 0.9148575 | 2.9031713 | 0.0142050 | 7.688375 | 0.0010455 | 0.3809315 |

| 27.0 | 102.0000 | 16.586073 | 15.4975049 | 17.674640 | 0.5528653 | 10.4139275 | 0.0051877 | 7.663586 | 0.0048242 | 1.3602272 |

| 10.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | 2.0002826 | 0.0071610 | 7.689488 | 0.0002467 | 0.2615288 |

| 9.0 | 126.0000 | 7.219140 | 5.8453381 | 8.592941 | 0.6977308 | 1.7808604 | 0.0082625 | 7.689693 | 0.0002261 | 0.2329695 |

| 8.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | -4.6831838 | 0.0037795 | 7.685057 | 0.0007088 | -0.6112670 |

| 3.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -10.4637615 | 0.0037538 | 7.663367 | 0.0035142 | -1.3657524 |

| 7.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -8.4152059 | 0.0043618 | 7.672944 | 0.0026442 | -1.0987059 |

| 15.0 | 107.0000 | 14.634628 | 13.6783551 | 15.590901 | 0.4856751 | 0.3653718 | 0.0040034 | 7.690448 | 0.0000046 | 0.0476951 |

| 12.0 | 98.0000 | 18.147228 | 16.8984852 | 19.395971 | 0.6342156 | -6.1472280 | 0.0068267 | 7.681105 | 0.0022193 | -0.8035896 |

| 5.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | -2.9997174 | 0.0071610 | 7.688248 | 0.0005547 | -0.3922008 |

| 18.5 | 88.0000 | 22.050117 | 20.2867099 | 23.813523 | 0.8956048 | -3.5501167 | 0.0136134 | 7.687333 | 0.0014965 | -0.4656789 |

| 7.0 | 103.0000 | 16.195784 | 15.1406420 | 17.250925 | 0.5358888 | -9.1957836 | 0.0048740 | 7.669525 | 0.0035319 | -1.2009286 |

| 7.0 | 116.0000 | 11.122028 | 10.1318833 | 12.112173 | 0.5028781 | -4.1220283 | 0.0042920 | 7.686277 | 0.0006242 | -0.5381613 |

| 7.0 | 128.0000 | 6.438562 | 4.9641036 | 7.913020 | 0.7488527 | 0.5614381 | 0.0095176 | 7.690402 | 0.0000260 | 0.0734930 |

| 9.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | -4.8540504 | 0.0037986 | 7.684654 | 0.0007653 | -0.6335752 |

| 11.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -3.2443393 | 0.0038818 | 7.687878 | 0.0003494 | -0.4234853 |

| 11.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | -2.8540504 | 0.0037986 | 7.688467 | 0.0002646 | -0.3725251 |

| 18.0 | 85.0000 | 23.220983 | 21.2855757 | 25.156391 | 0.9829612 | -5.2209833 | 0.0163986 | 7.683653 | 0.0039208 | -0.6858201 |

| 0.0 | 136.0000 | 3.316251 | 1.4000461 | 5.232456 | 0.9732084 | -3.3162509 | 0.0160748 | 7.687728 | 0.0015496 | -0.4355458 |

| 27.0 | 90.0000 | 21.269539 | 19.6174647 | 22.921613 | 0.8390609 | 5.7304611 | 0.0119487 | 7.682291 | 0.0034107 | 0.7510473 |

| 23.0 | 94.0000 | 19.708384 | 18.2681930 | 21.148574 | 0.7314487 | 3.2916165 | 0.0090804 | 7.687787 | 0.0008503 | 0.4307820 |

| 3.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -10.4637615 | 0.0037538 | 7.663367 | 0.0035142 | -1.3657524 |

| 8.5 | 104.0000 | 15.805495 | 14.7805831 | 16.830406 | 0.5205355 | -7.3054948 | 0.0045987 | 7.677265 | 0.0021021 | -0.9539333 |

| 14.0 | 104.0000 | 15.805495 | 14.7805831 | 16.830406 | 0.5205355 | -1.8054948 | 0.0045987 | 7.689674 | 0.0001284 | -0.2357570 |

| 5.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -9.2443393 | 0.0038818 | 7.669324 | 0.0028370 | -1.2066683 |

| 7.0 | 106.0000 | 15.024917 | 14.0497190 | 16.000115 | 0.4952868 | -8.0249170 | 0.0041634 | 7.674538 | 0.0022943 | -1.0476446 |

| 8.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | -2.3414506 | 0.0047788 | 7.689124 | 0.0002245 | -0.3057684 |

| 10.0 | 113.0000 | 12.292895 | 11.3551157 | 13.230674 | 0.4762824 | -2.2928949 | 0.0038500 | 7.689181 | 0.0001731 | -0.2992880 |

| 9.0 | 81.0000 | 24.782139 | 22.6102899 | 26.953988 | 1.1030457 | -15.7821388 | 0.0206501 | 7.627590 | 0.0455075 | -2.0776118 |

| 5.0 | 96.0000 | 18.927806 | 17.5864623 | 20.269149 | 0.6812459 | -13.9278057 | 0.0078767 | 7.642177 | 0.0131729 | -1.8216601 |

| 24.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | 14.0488383 | 0.0050798 | 7.641470 | 0.0085951 | 1.8349058 |

| 10.0 | 107.0000 | 14.634628 | 13.6783551 | 15.590901 | 0.4856751 | -4.6346282 | 0.0040034 | 7.685168 | 0.0007356 | -0.6049973 |

| 21.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | 8.3168162 | 0.0037795 | 7.673363 | 0.0022354 | 1.0855426 |

| 14.0 | 95.0000 | 19.318095 | 17.9280271 | 20.708162 | 0.7059920 | -5.3180946 | 0.0084593 | 7.683453 | 0.0020651 | -0.6957741 |

| 4.0 | 116.0000 | 11.122028 | 10.1318833 | 12.112173 | 0.5028781 | -7.1220283 | 0.0042920 | 7.677925 | 0.0018634 | -0.9298335 |

| 17.0 | 84.0000 | 23.611272 | 21.6174266 | 25.605118 | 1.0126409 | -6.6112721 | 0.0174039 | 7.679518 | 0.0066861 | -0.8688904 |

| 6.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -5.9026061 | 0.0039589 | 7.681862 | 0.0011798 | -0.7705000 |

| 6.0 | 98.0000 | 18.147228 | 16.8984852 | 19.395971 | 0.6342156 | -12.1472280 | 0.0068267 | 7.653805 | 0.0086660 | -1.5879329 |

| 5.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -9.2443393 | 0.0038818 | 7.669324 | 0.0028370 | -1.2066683 |

| 11.0 | 127.0000 | 6.828851 | 5.4053723 | 8.252329 | 0.7229610 | 4.1711492 | 0.0088708 | 7.686156 | 0.0013333 | 0.5458309 |

| 7.0 | 96.0000 | 18.927806 | 17.5864623 | 20.269149 | 0.6812459 | -11.9278057 | 0.0078767 | 7.655083 | 0.0096613 | -1.5600740 |

| 17.0 | 104.0000 | 15.805495 | 14.7805831 | 16.830406 | 0.5205355 | 1.1945052 | 0.0045987 | 7.690128 | 0.0000562 | 0.1559755 |

| 7.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | -5.6831838 | 0.0037795 | 7.682492 | 0.0010438 | -0.7417908 |

| 11.0 | 106.0000 | 15.024917 | 14.0497190 | 16.000115 | 0.4952868 | -4.0249170 | 0.0041634 | 7.686473 | 0.0005772 | -0.5254488 |

| 37.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | 22.7556607 | 0.0038818 | 7.561376 | 0.0171907 | 2.9703081 |

| 30.0 | 88.0000 | 22.050117 | 20.2867099 | 23.813523 | 0.8956048 | 7.9498833 | 0.0136134 | 7.674685 | 0.0075041 | 1.0428088 |

| 20.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | 8.4876828 | 0.0041063 | 7.672645 | 0.0025311 | 1.1080264 |

| 8.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | -2.3414506 | 0.0047788 | 7.689124 | 0.0002245 | -0.3057684 |

| 16.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | 2.9265273 | 0.0037475 | 7.688363 | 0.0002744 | 0.3819754 |

| 6.0 | 102.0000 | 16.586073 | 15.4975049 | 17.674640 | 0.5528653 | -10.5860725 | 0.0051877 | 7.662688 | 0.0049850 | -1.3827121 |

| 10.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -5.4152059 | 0.0043618 | 7.683224 | 0.0010950 | -0.7070200 |

| 6.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | -7.8540504 | 0.0037986 | 7.675216 | 0.0020036 | -1.0251504 |

| 7.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -6.0734727 | 0.0037475 | 7.681357 | 0.0011819 | -0.7927201 |

| 7.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -6.4637615 | 0.0037538 | 7.680146 | 0.0013410 | -0.8436639 |

| 15.0 | 88.0000 | 22.050117 | 20.2867099 | 23.813523 | 0.8956048 | -7.0501167 | 0.0136134 | 7.678061 | 0.0059016 | -0.9247838 |

| 15.0 | 95.0000 | 19.318095 | 17.9280271 | 20.708162 | 0.7059920 | -4.3180946 | 0.0084593 | 7.685848 | 0.0013615 | -0.5649427 |

| 14.0 | 92.0000 | 20.488961 | 18.9448765 | 22.033046 | 0.7842148 | -6.4889612 | 0.0104377 | 7.679995 | 0.0038087 | -0.8498085 |

| 9.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -4.0734727 | 0.0037475 | 7.686378 | 0.0005317 | -0.5316766 |

| 12.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -1.4637615 | 0.0037538 | 7.689951 | 0.0000688 | -0.1910533 |

| 8.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -3.9026061 | 0.0039589 | 7.686714 | 0.0005157 | -0.5094289 |

| 44.0 | 67.0000 | 30.246183 | 27.2094744 | 33.282891 | 1.5422935 | 13.7538171 | 0.0403710 | 7.641780 | 0.0703742 | 1.8291067 |

| 36.0 | 103.0000 | 16.195784 | 15.1406420 | 17.250925 | 0.5358888 | 19.8042164 | 0.0048740 | 7.592798 | 0.0163813 | 2.5863429 |

| 30.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | 14.5847941 | 0.0043618 | 7.637684 | 0.0079428 | 1.9042196 |

| 39.0 | 69.0000 | 29.465605 | 26.5548035 | 32.376407 | 1.4783475 | 9.5343948 | 0.0370927 | 7.667196 | 0.0308610 | 1.2658096 |

| 26.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | 18.0002826 | 0.0071610 | 7.609687 | 0.0199748 | 2.3534632 |

| 37.0 | 68.0000 | 29.855894 | 26.8822104 | 32.829578 | 1.5102843 | 7.1441059 | 0.0387126 | 7.677394 | 0.0181446 | 0.9492678 |

| 13.0 | 99.0000 | 17.756939 | 16.5517004 | 18.962178 | 0.6121206 | -4.7569391 | 0.0063593 | 7.684870 | 0.0012368 | -0.6216993 |

| 32.0 | 107.0000 | 14.634628 | 13.6783551 | 15.590901 | 0.4856751 | 17.3653718 | 0.0040034 | 7.615553 | 0.0103272 | 2.2668493 |

| 34.0 | 92.0000 | 20.488961 | 18.9448765 | 22.033046 | 0.7842148 | 13.5110388 | 0.0104377 | 7.644915 | 0.0165121 | 1.7694352 |

| 7.0 | 117.0000 | 10.731739 | 9.7160668 | 11.747412 | 0.5158432 | -3.7317395 | 0.0045162 | 7.687035 | 0.0005386 | -0.4872611 |

| 27.0 | 103.0000 | 16.195784 | 15.1406420 | 17.250925 | 0.5358888 | 10.8042164 | 0.0048740 | 7.661538 | 0.0048755 | 1.4109828 |

| 2.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -11.0734727 | 0.0037475 | 7.660109 | 0.0039289 | -1.4453286 |

| 22.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 13.2197049 | 0.0062133 | 7.647051 | 0.0093300 | 1.7275978 |

| 23.0 | 60.0000 | 32.978205 | 29.4969773 | 36.459433 | 1.7680574 | -9.9782050 | 0.0530552 | 7.664543 | 0.0499906 | -1.3358496 |

| 17.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | 6.6585494 | 0.0047788 | 7.679502 | 0.0018153 | 0.8695355 |

| 16.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | 8.0002826 | 0.0071610 | 7.674588 | 0.0039458 | 1.0460042 |

| 7.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -6.4637615 | 0.0037538 | 7.680146 | 0.0013410 | -0.8436639 |

| 15.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | 5.8294160 | 0.0057970 | 7.682059 | 0.0016913 | 0.7616492 |

| 1.0 | 138.0000 | 2.535673 | 0.5024366 | 4.568910 | 1.0326469 | -1.5356732 | 0.0180983 | 7.689889 | 0.0003757 | -0.2018981 |

| 29.0 | 81.0000 | 24.782139 | 22.6102899 | 26.953988 | 1.1030457 | 4.2178612 | 0.0206501 | 7.686006 | 0.0032504 | 0.5552529 |

| 2.0 | 120.0000 | 9.560873 | 8.4482829 | 10.673463 | 0.5650659 | -7.5608728 | 0.0054192 | 7.676312 | 0.0026577 | -0.9876871 |

| 28.0 | 89.0000 | 21.659828 | 19.9524648 | 23.367191 | 0.8671411 | 6.3401722 | 0.0127619 | 7.680447 | 0.0044666 | 0.8312996 |

| 3.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | -5.7802951 | 0.0062133 | 7.682196 | 0.0017838 | -0.7553894 |

| 16.0 | 92.0000 | 20.488961 | 18.9448765 | 22.033046 | 0.7842148 | -4.4889612 | 0.0104377 | 7.685464 | 0.0018227 | -0.5878842 |

| 18.0 | 125.0000 | 7.609428 | 6.2838548 | 8.935002 | 0.6732367 | 10.3905715 | 0.0076926 | 7.663639 | 0.0071575 | 1.3588884 |

| 17.0 | 104.0000 | 15.805495 | 14.7805831 | 16.830406 | 0.5205355 | 1.1945052 | 0.0045987 | 7.690128 | 0.0000562 | 0.1559755 |

| 11.0 | 127.0000 | 6.828851 | 5.4053723 | 8.252329 | 0.7229610 | 4.1711492 | 0.0088708 | 7.686156 | 0.0013333 | 0.5458309 |

| 5.0 | 135.0000 | 3.706540 | 1.8480728 | 5.565007 | 0.9438843 | 1.2934602 | 0.0151207 | 7.690062 | 0.0002213 | 0.1697966 |

| 26.0 | 96.0000 | 18.927806 | 17.5864623 | 20.269149 | 0.6812459 | 7.0721943 | 0.0078767 | 7.678055 | 0.0033964 | 0.9249938 |

| 6.0 | 125.0000 | 7.609428 | 6.2838548 | 8.935002 | 0.6732367 | -1.6094285 | 0.0076926 | 7.689838 | 0.0001717 | -0.2104825 |

| 8.0 | 136.0000 | 3.316251 | 1.4000461 | 5.232456 | 0.9732084 | 4.6837491 | 0.0160748 | 7.684988 | 0.0030911 | 0.6151486 |

| 24.0 | 92.0000 | 20.488961 | 18.9448765 | 22.033046 | 0.7842148 | 3.5110388 | 0.0104377 | 7.687412 | 0.0011151 | 0.4598133 |

| 12.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -2.2443393 | 0.0038818 | 7.689235 | 0.0001672 | -0.2929548 |

| 3.0 | 132.0000 | 4.877406 | 3.1884041 | 6.566409 | 0.8578160 | -1.8774064 | 0.0124889 | 7.689602 | 0.0003831 | -0.2461245 |

| 1.0 | 127.0000 | 6.828851 | 5.4053723 | 8.252329 | 0.7229610 | -5.8288508 | 0.0088708 | 7.682034 | 0.0026036 | -0.7627554 |

| 23.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | 9.5362385 | 0.0037538 | 7.667968 | 0.0029188 | 1.2446902 |

| 24.0 | 107.0000 | 14.634628 | 13.6783551 | 15.590901 | 0.4856751 | 9.3653718 | 0.0040034 | 7.668763 | 0.0030038 | 1.2225414 |

| 4.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | -3.9997174 | 0.0071610 | 7.686511 | 0.0009862 | -0.5229467 |

| 25.0 | 101.0000 | 16.976361 | 15.8514566 | 18.101266 | 0.5713203 | 8.0236386 | 0.0055398 | 7.674521 | 0.0030603 | 1.0482024 |

| 2.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | -7.1705840 | 0.0057970 | 7.677734 | 0.0025590 | -0.9368811 |

| 2.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | -6.7802951 | 0.0062133 | 7.679080 | 0.0024543 | -0.8860730 |

| 5.0 | 134.0000 | 4.096829 | 2.2955143 | 5.898143 | 0.9148575 | 0.9031713 | 0.0142050 | 7.690277 | 0.0001012 | 0.1185071 |

| 25.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | 11.5362385 | 0.0037538 | 7.657512 | 0.0042714 | 1.5057344 |

| 9.0 | 106.0000 | 15.024917 | 14.0497190 | 16.000115 | 0.4952868 | -6.0249170 | 0.0041634 | 7.681499 | 0.0012932 | -0.7865467 |

| 4.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -5.9511617 | 0.0050798 | 7.681709 | 0.0015423 | -0.7772757 |

| 4.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -5.9511617 | 0.0050798 | 7.681709 | 0.0015423 | -0.7772757 |

| 17.0 | 94.0000 | 19.708384 | 18.2681930 | 21.148574 | 0.7314487 | -2.7083835 | 0.0090804 | 7.688657 | 0.0005756 | -0.3544528 |

| 3.0 | 123.0000 | 8.390006 | 7.1558657 | 9.624147 | 0.6267994 | -5.3900062 | 0.0066679 | 7.683275 | 0.0016660 | -0.7045463 |

| 7.0 | 126.0000 | 7.219140 | 5.8453381 | 8.592941 | 0.6977308 | -0.2191396 | 0.0082625 | 7.690469 | 0.0000034 | -0.0286675 |

| 4.0 | 130.0000 | 5.657984 | 4.0781656 | 7.237803 | 0.8023635 | -1.6579842 | 0.0109264 | 7.689796 | 0.0002605 | -0.2171869 |

| 4.0 | 123.0000 | 8.390006 | 7.1558657 | 9.624147 | 0.6267994 | -4.3900062 | 0.0066679 | 7.685701 | 0.0011052 | -0.5738328 |

| 5.0 | 113.0000 | 12.292895 | 11.3551157 | 13.230674 | 0.4762824 | -7.2928949 | 0.0038500 | 7.677321 | 0.0017511 | -0.9519301 |

| 5.0 | 116.0000 | 11.122028 | 10.1318833 | 12.112173 | 0.5028781 | -6.1220283 | 0.0042920 | 7.681205 | 0.0013769 | -0.7992761 |

| 5.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -8.0734727 | 0.0037475 | 7.674351 | 0.0020885 | -1.0537635 |

| 4.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -5.9511617 | 0.0050798 | 7.681709 | 0.0015423 | -0.7772757 |

| 5.0 | 131.0000 | 5.267695 | 3.6337155 | 6.901675 | 0.8298710 | -0.2676953 | 0.0116884 | 7.690463 | 0.0000073 | -0.0350801 |

| 5.0 | 120.0000 | 9.560873 | 8.4482829 | 10.673463 | 0.5650659 | -4.5608728 | 0.0054192 | 7.685328 | 0.0009671 | -0.5957930 |

| 15.0 | 133.0000 | 4.487118 | 2.7423129 | 6.231922 | 0.8861571 | 10.5128825 | 0.0133277 | 7.662845 | 0.0128398 | 1.3788050 |

| 5.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -9.2443393 | 0.0038818 | 7.669324 | 0.0028370 | -1.2066683 |

| 5.0 | 117.0000 | 10.731739 | 9.7160668 | 11.747412 | 0.5158432 | -5.7317395 | 0.0045162 | 7.682349 | 0.0012705 | -0.7484052 |

| 5.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | -5.3414506 | 0.0047788 | 7.683417 | 0.0011682 | -0.6975364 |

| 5.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | -7.6831838 | 0.0037795 | 7.675874 | 0.0019077 | -1.0028385 |

| 19.0 | 98.0000 | 18.147228 | 16.8984852 | 19.395971 | 0.6342156 | 0.8527720 | 0.0068267 | 7.690300 | 0.0000427 | 0.1114777 |

| 8.0 | 129.0000 | 6.048273 | 4.5216627 | 7.574883 | 0.7753399 | 1.9517270 | 0.0102028 | 7.689533 | 0.0003366 | 0.2555721 |

| 8.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | -1.1705840 | 0.0057970 | 7.690141 | 0.0000682 | -0.1529440 |

| 12.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | 0.4876828 | 0.0041063 | 7.690422 | 0.0000084 | 0.0636647 |

| 6.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -7.0734727 | 0.0037475 | 7.678102 | 0.0016031 | -0.9232418 |

| 6.0 | 130.0000 | 5.657984 | 4.0781656 | 7.237803 | 0.8023635 | 0.3420158 | 0.0109264 | 7.690452 | 0.0000111 | 0.0448022 |

| 10.0 | 99.0000 | 17.756939 | 16.5517004 | 18.962178 | 0.6121206 | -7.7569391 | 0.0063593 | 7.675553 | 0.0032888 | -1.0137787 |

| 11.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 2.2197049 | 0.0062133 | 7.689260 | 0.0002630 | 0.2900789 |

| 20.0 | 106.0000 | 15.024917 | 14.0497190 | 16.000115 | 0.4952868 | 4.9750830 | 0.0041634 | 7.684357 | 0.0008818 | 0.6494920 |

| 11.0 | 120.0000 | 9.560873 | 8.4482829 | 10.673463 | 0.5650659 | 1.4391272 | 0.0054192 | 7.689968 | 0.0000963 | 0.1879951 |

| 7.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | -4.5123172 | 0.0041063 | 7.685444 | 0.0007154 | -0.5890615 |

| 7.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -2.9511617 | 0.0050798 | 7.688325 | 0.0003793 | -0.3854485 |

| 12.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -3.4152059 | 0.0043618 | 7.687595 | 0.0004355 | -0.4458960 |

| 8.0 | 127.0000 | 6.828851 | 5.4053723 | 8.252329 | 0.7229610 | 1.1711492 | 0.0088708 | 7.690140 | 0.0001051 | 0.1532550 |

| 8.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -6.2443393 | 0.0038818 | 7.680835 | 0.0012945 | -0.8150768 |

| 15.0 | 123.0000 | 8.390006 | 7.1558657 | 9.624147 | 0.6267994 | 6.6099938 | 0.0066679 | 7.679641 | 0.0025056 | 0.8640151 |

| 8.0 | 126.0000 | 7.219140 | 5.8453381 | 8.592941 | 0.6977308 | 0.7808604 | 0.0082625 | 7.690329 | 0.0000435 | 0.1021510 |

| 9.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -0.9511617 | 0.0050798 | 7.690257 | 0.0000394 | -0.1242304 |

| 9.0 | 117.0000 | 10.731739 | 9.7160668 | 11.747412 | 0.5158432 | -1.7317395 | 0.0045162 | 7.689739 | 0.0001160 | -0.2261169 |

| 14.0 | 126.0000 | 7.219140 | 5.8453381 | 8.592941 | 0.6977308 | 6.7808604 | 0.0082625 | 7.679054 | 0.0032779 | 0.8870619 |

| 14.0 | 125.0000 | 7.609428 | 6.2838548 | 8.935002 | 0.6732367 | 6.3905715 | 0.0076926 | 7.680339 | 0.0027075 | 0.8357648 |

| 17.0 | 99.0000 | 17.756939 | 16.5517004 | 18.962178 | 0.6121206 | -0.7569391 | 0.0063593 | 7.690339 | 0.0000313 | -0.0989268 |

| 13.0 | 94.0000 | 19.708384 | 18.2681930 | 21.148574 | 0.7314487 | -6.7083835 | 0.0090804 | 7.679288 | 0.0035316 | -0.8779426 |

| 11.0 | 116.0000 | 11.122028 | 10.1318833 | 12.112173 | 0.5028781 | -0.1220283 | 0.0042920 | 7.690477 | 0.0000005 | -0.0159317 |

| 18.0 | 113.0000 | 12.292895 | 11.3551157 | 13.230674 | 0.4762824 | 5.7071051 | 0.0038500 | 7.682424 | 0.0010724 | 0.7449395 |

| 11.0 | 117.0000 | 10.731739 | 9.7160668 | 11.747412 | 0.5158432 | 0.2682605 | 0.0045162 | 7.690463 | 0.0000028 | 0.0350273 |

| 16.0 | 98.0000 | 18.147228 | 16.8984852 | 19.395971 | 0.6342156 | -2.1472280 | 0.0068267 | 7.689337 | 0.0002708 | -0.2806940 |

| 10.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -1.9026061 | 0.0039589 | 7.689586 | 0.0001226 | -0.2483578 |

| 16.5 | 97.0000 | 18.537517 | 17.2433404 | 19.831693 | 0.6572906 | -2.0375168 | 0.0073325 | 7.689451 | 0.0002621 | -0.2664200 |

| 12.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | -1.8540504 | 0.0037986 | 7.689631 | 0.0001117 | -0.2420000 |

| 15.0 | 98.0000 | 18.147228 | 16.8984852 | 19.395971 | 0.6342156 | -3.1472280 | 0.0068267 | 7.688024 | 0.0005817 | -0.4114179 |

| 11.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | 3.0002826 | 0.0071610 | 7.688248 | 0.0005549 | 0.3922747 |

| 13.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | -0.8540504 | 0.0037986 | 7.690300 | 0.0000237 | -0.1114750 |

| 14.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | 2.0973939 | 0.0039589 | 7.689393 | 0.0001490 | 0.2737845 |

| 12.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | 0.4876828 | 0.0041063 | 7.690422 | 0.0000084 | 0.0636647 |

| 12.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 3.2197049 | 0.0062133 | 7.687911 | 0.0005534 | 0.4207624 |

| 13.0 | 85.0000 | 23.220983 | 21.2855757 | 25.156391 | 0.9829612 | -10.2209833 | 0.0163986 | 7.664280 | 0.0150266 | -1.3426122 |

| 42.0 | 89.0000 | 21.659828 | 19.9524648 | 23.367191 | 0.8671411 | 20.3401722 | 0.0127619 | 7.586574 | 0.0459711 | 2.6669272 |

| 21.0 | 83.0000 | 24.001561 | 21.9488035 | 26.054319 | 1.0425613 | -3.0015610 | 0.0184475 | 7.688220 | 0.0014639 | -0.3946916 |

| 27.0 | 117.0000 | 10.731739 | 9.7160668 | 11.747412 | 0.5158432 | 16.2682605 | 0.0045162 | 7.624727 | 0.0102350 | 2.1241809 |

| 29.0 | 131.0000 | 5.267695 | 3.6337155 | 6.901675 | 0.8298710 | 23.7323047 | 0.0116884 | 7.548831 | 0.0571944 | 3.1100005 |

| 21.0 | 100.0000 | 17.366650 | 16.2027698 | 18.530531 | 0.5911154 | 3.6333498 | 0.0059303 | 7.687209 | 0.0006723 | 0.4747514 |

| 7.0 | 127.0000 | 6.828851 | 5.4053723 | 8.252329 | 0.7229610 | 0.1711492 | 0.0088708 | 7.690473 | 0.0000022 | 0.0223964 |

| 15.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | 3.0973939 | 0.0039589 | 7.688108 | 0.0003249 | 0.4043201 |

| 3.0 | 102.0000 | 16.586073 | 15.4975049 | 17.674640 | 0.5528653 | -13.5860725 | 0.0051877 | 7.644650 | 0.0082108 | -1.7745606 |

| 4.0 | 134.0000 | 4.096829 | 2.2955143 | 5.898143 | 0.9148575 | -0.0968287 | 0.0142050 | 7.690478 | 0.0000012 | -0.0127051 |

| 5.0 | 127.0000 | 6.828851 | 5.4053723 | 8.252329 | 0.7229610 | -1.8288508 | 0.0088708 | 7.689650 | 0.0002563 | -0.2393209 |

| 15.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -0.4152059 | 0.0043618 | 7.690438 | 0.0000064 | -0.0542101 |

| 4.0 | 123.0000 | 8.390006 | 7.1558657 | 9.624147 | 0.6267994 | -4.3900062 | 0.0066679 | 7.685701 | 0.0011052 | -0.5738328 |

| 24.0 | 78.0000 | 25.953005 | 23.5998340 | 28.306177 | 1.1951364 | -1.9530054 | 0.0242421 | 7.689518 | 0.0008241 | -0.2575727 |

| 6.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | -1.9997174 | 0.0071610 | 7.689489 | 0.0002465 | -0.2614548 |

| 10.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -4.2443393 | 0.0038818 | 7.686026 | 0.0005980 | -0.5540158 |

| 7.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -4.9026061 | 0.0039589 | 7.684536 | 0.0008139 | -0.6399645 |

| 4.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | -4.7802951 | 0.0062133 | 7.684816 | 0.0012200 | -0.6247059 |

| 6.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -8.2443393 | 0.0038818 | 7.673658 | 0.0022564 | -1.0761378 |

| 21.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | 10.6585494 | 0.0047788 | 7.662317 | 0.0046513 | 1.3918927 |

| 13.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -1.2443393 | 0.0038818 | 7.690098 | 0.0000514 | -0.1624242 |

| 5.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | -2.9997174 | 0.0071610 | 7.688248 | 0.0005547 | -0.3922008 |

| 9.0 | 102.0000 | 16.586073 | 15.4975049 | 17.674640 | 0.5528653 | -7.5860725 | 0.0051877 | 7.676221 | 0.0025599 | -0.9908637 |

| 10.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -5.4152059 | 0.0043618 | 7.683224 | 0.0010950 | -0.7070200 |

| 10.0 | 125.6231 | 7.366226 | 6.0107786 | 8.721674 | 0.6884091 | 2.6337739 | 0.0080432 | 7.688758 | 0.0004812 | 0.3445082 |

| 7.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | -6.8540504 | 0.0037986 | 7.678858 | 0.0015259 | -0.8946254 |

| 8.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | 0.0002826 | 0.0071610 | 7.690481 | 0.0000000 | 0.0000370 |

| 9.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -0.9511617 | 0.0050798 | 7.690257 | 0.0000394 | -0.1242304 |

| 8.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -5.4637615 | 0.0037538 | 7.683098 | 0.0009581 | -0.7131418 |

| 9.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 0.2197049 | 0.0062133 | 7.690469 | 0.0000026 | 0.0287118 |

| 12.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 3.2197049 | 0.0062133 | 7.687911 | 0.0005534 | 0.4207624 |

| 14.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -0.2443393 | 0.0038818 | 7.690466 | 0.0000020 | -0.0318937 |

| 47.0 | 87.0000 | 22.440406 | 20.6202698 | 24.260541 | 0.9244165 | 24.5595945 | 0.0145034 | 7.538244 | 0.0764377 | 3.2230060 |

| 29.0 | 77.0000 | 26.343294 | 23.9290733 | 28.757515 | 1.2261425 | 2.6567058 | 0.0255163 | 7.688697 | 0.0016094 | 0.3506095 |

| 45.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | 29.5847941 | 0.0043618 | 7.470849 | 0.0326821 | 3.8626492 |

| 20.0 | 96.0000 | 18.927806 | 17.5864623 | 20.269149 | 0.6812459 | 1.0721943 | 0.0078767 | 7.690195 | 0.0000781 | 0.1402356 |

| 33.0 | 106.0000 | 15.024917 | 14.0497190 | 16.000115 | 0.4952868 | 17.9750830 | 0.0041634 | 7.610158 | 0.0115112 | 2.3466285 |

| 33.0 | 97.0000 | 18.537517 | 17.2433404 | 19.831693 | 0.6572906 | 14.4624832 | 0.0073325 | 7.638413 | 0.0132079 | 1.8910736 |

| 15.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | 1.9265273 | 0.0037475 | 7.689563 | 0.0001189 | 0.2514536 |

| 27.0 | 85.0000 | 23.220983 | 21.2855757 | 25.156391 | 0.9829612 | 3.7790167 | 0.0163986 | 7.686904 | 0.0020541 | 0.4964057 |

| 22.0 | 88.0000 | 22.050117 | 20.2867099 | 23.813523 | 0.8956048 | -0.0501167 | 0.0136134 | 7.690480 | 0.0000003 | -0.0065739 |

| 12.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -3.4152059 | 0.0043618 | 7.687595 | 0.0004355 | -0.4458960 |

| 32.0 | 81.0000 | 24.782139 | 22.6102899 | 26.953988 | 1.1030457 | 7.2178612 | 0.0206501 | 7.677369 | 0.0095185 | 0.9501826 |

| 26.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | 16.8294160 | 0.0057970 | 7.620000 | 0.0140960 | 2.1988672 |

| 29.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | 14.7556607 | 0.0038818 | 7.636462 | 0.0072282 | 1.9260640 |

| 23.0 | 106.0000 | 15.024917 | 14.0497190 | 16.000115 | 0.4952868 | 7.9750830 | 0.0041634 | 7.674736 | 0.0022659 | 1.0411389 |

| 29.0 | 92.0000 | 20.488961 | 18.9448765 | 22.033046 | 0.7842148 | 8.5110388 | 0.0104377 | 7.672432 | 0.0065522 | 1.1146243 |

| 29.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | 16.3168162 | 0.0037795 | 7.624381 | 0.0086041 | 2.1297331 |

| 14.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 5.2197049 | 0.0062133 | 7.683726 | 0.0014546 | 0.6821295 |

| 14.0 | 96.0000 | 18.927806 | 17.5864623 | 20.269149 | 0.6812459 | -4.9278057 | 0.0078767 | 7.684451 | 0.0016490 | -0.6445227 |

| 23.0 | 82.0000 | 24.391850 | 22.2797461 | 26.503954 | 1.0727023 | -1.3918499 | 0.0195296 | 7.689994 | 0.0003340 | -0.1831229 |

| 0.0 | 134.0000 | 4.096829 | 2.2955143 | 5.898143 | 0.9148575 | -4.0968287 | 0.0142050 | 7.686287 | 0.0020819 | -0.5375539 |

| 4.0 | 129.0000 | 6.048273 | 4.5216627 | 7.574883 | 0.7753399 | -2.0482730 | 0.0102028 | 7.689437 | 0.0003708 | -0.2682145 |

| 2.0 | 128.0000 | 6.438562 | 4.9641036 | 7.913020 | 0.7488527 | -4.4385619 | 0.0095176 | 7.685581 | 0.0016219 | -0.5810137 |

| 3.0 | 125.0000 | 7.609428 | 6.2838548 | 8.935002 | 0.6732367 | -4.6094285 | 0.0076926 | 7.685206 | 0.0014086 | -0.6028253 |

| 8.0 | 100.0000 | 17.366650 | 16.2027698 | 18.530531 | 0.5911154 | -9.3666502 | 0.0059303 | 7.668715 | 0.0044681 | -1.2238928 |

| 19.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | 5.1459496 | 0.0037986 | 7.683932 | 0.0008601 | 0.6716754 |

| 14.0 | 109.0000 | 13.854050 | 12.9225573 | 14.785543 | 0.4730898 | 0.1459496 | 0.0037986 | 7.690475 | 0.0000007 | 0.0190501 |

| 6.0 | 116.0000 | 11.122028 | 10.1318833 | 12.112173 | 0.5028781 | -5.1220283 | 0.0042920 | 7.683989 | 0.0009638 | -0.6687187 |

| 3.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | -9.6831838 | 0.0037795 | 7.667267 | 0.0030302 | -1.2638861 |

| 9.0 | 123.0000 | 8.390006 | 7.1558657 | 9.624147 | 0.6267994 | 0.6099938 | 0.0066679 | 7.690388 | 0.0000213 | 0.0797344 |

| 7.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -6.0734727 | 0.0037475 | 7.681357 | 0.0011819 | -0.7927201 |

| 8.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -3.9026061 | 0.0039589 | 7.686714 | 0.0005157 | -0.5094289 |

| 20.0 | 103.0000 | 16.195784 | 15.1406420 | 17.250925 | 0.5358888 | 3.8042164 | 0.0048740 | 7.686898 | 0.0006045 | 0.4968138 |

| 5.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | -6.5123172 | 0.0041063 | 7.679986 | 0.0014900 | -0.8501519 |

| 7.0 | 105.0000 | 15.415206 | 14.4170377 | 16.413374 | 0.5069529 | -8.4152059 | 0.0043618 | 7.672944 | 0.0026442 | -1.0987059 |

| 7.0 | 103.0000 | 16.195784 | 15.1406420 | 17.250925 | 0.5358888 | -9.1957836 | 0.0048740 | 7.669525 | 0.0035319 | -1.2009286 |

| 10.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -1.9026061 | 0.0039589 | 7.689586 | 0.0001226 | -0.2483578 |

| 11.0 | 122.0000 | 8.780295 | 7.5889743 | 9.971616 | 0.6050519 | 2.2197049 | 0.0062133 | 7.689260 | 0.0002630 | 0.2900789 |

| 12.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | -1.4637615 | 0.0037538 | 7.689951 | 0.0000688 | -0.1910533 |

| 8.0 | 120.0000 | 9.560873 | 8.4482829 | 10.673463 | 0.5650659 | -1.5608728 | 0.0054192 | 7.689877 | 0.0001133 | -0.2038989 |

| 8.0 | 125.0000 | 7.609428 | 6.2838548 | 8.935002 | 0.6732367 | 0.3905715 | 0.0076926 | 7.690443 | 0.0000101 | 0.0510793 |

| 14.0 | 112.0000 | 12.683184 | 11.7540290 | 13.612339 | 0.4719022 | 1.3168162 | 0.0037795 | 7.690052 | 0.0000560 | 0.1718759 |

| 10.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -1.9026061 | 0.0039589 | 7.689586 | 0.0001226 | -0.2483578 |

| 13.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -0.0734727 | 0.0037475 | 7.690479 | 0.0000002 | -0.0095898 |

| 11.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | 1.0488383 | 0.0050798 | 7.690208 | 0.0000479 | 0.1369878 |

| 11.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -3.2443393 | 0.0038818 | 7.687878 | 0.0003494 | -0.4234853 |

| 9.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | -0.9511617 | 0.0050798 | 7.690257 | 0.0000394 | -0.1242304 |

| 15.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | 5.8294160 | 0.0057970 | 7.682059 | 0.0016913 | 0.7616492 |

| 11.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | 0.6585494 | 0.0047788 | 7.690373 | 0.0000178 | 0.0859995 |

| 13.0 | 119.0000 | 9.951162 | 8.8739776 | 11.028346 | 0.5470838 | 3.0488383 | 0.0050798 | 7.688180 | 0.0004048 | 0.3982059 |

| 44.0 | 97.0000 | 18.537517 | 17.2433404 | 19.831693 | 0.6572906 | 25.4624832 | 0.0073325 | 7.527916 | 0.0409401 | 3.3294027 |

| 28.0 | 110.0000 | 13.463762 | 12.5377737 | 14.389749 | 0.4702938 | 14.5362385 | 0.0037538 | 7.638069 | 0.0067819 | 1.8973008 |

| 4.0 | 111.0000 | 13.073473 | 12.1482682 | 13.998677 | 0.4698959 | -9.0734727 | 0.0037475 | 7.670102 | 0.0026379 | -1.1842852 |

| 3.0 | 136.0000 | 3.316251 | 1.4000461 | 5.232456 | 0.9732084 | -0.3162509 | 0.0160748 | 7.690456 | 0.0000141 | -0.0415354 |

| 15.0 | 101.0000 | 16.976361 | 15.8514566 | 18.101266 | 0.5713203 | -1.9763614 | 0.0055398 | 7.689513 | 0.0001857 | -0.2581904 |

| 2.0 | 113.0000 | 12.292895 | 11.3551157 | 13.230674 | 0.4762824 | -10.2928949 | 0.0038500 | 7.664244 | 0.0034881 | -1.3435154 |

| 11.0 | 102.0000 | 16.586073 | 15.4975049 | 17.674640 | 0.5528653 | -5.5860725 | 0.0051877 | 7.682752 | 0.0013881 | -0.7296313 |

| 4.0 | 118.0000 | 10.341451 | 9.2966681 | 11.386233 | 0.5306276 | -6.3414506 | 0.0047788 | 7.680523 | 0.0016465 | -0.8281257 |

| 4.0 | 130.0000 | 5.657984 | 4.0781656 | 7.237803 | 0.8023635 | -1.6579842 | 0.0109264 | 7.689796 | 0.0002605 | -0.2171869 |

| 21.0 | 103.0000 | 16.195784 | 15.1406420 | 17.250925 | 0.5358888 | 4.8042164 | 0.0048740 | 7.684767 | 0.0009640 | 0.6274094 |

| 10.0 | 128.0000 | 6.438562 | 4.9641036 | 7.913020 | 0.7488527 | 3.5614381 | 0.0095176 | 7.687326 | 0.0010442 | 0.4661970 |

| 5.0 | 129.0000 | 6.048273 | 4.5216627 | 7.574883 | 0.7753399 | -1.0482730 | 0.0102028 | 7.690207 | 0.0000971 | -0.1372678 |

| 23.0 | 91.0000 | 20.879250 | 19.2816314 | 22.476869 | 0.8114038 | 2.1207499 | 0.0111740 | 7.689361 | 0.0004362 | 0.2778414 |

| 5.0 | 128.0000 | 6.438562 | 4.9641036 | 7.913020 | 0.7488527 | -1.4385619 | 0.0095176 | 7.689966 | 0.0001704 | -0.1883097 |

| 6.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | -8.2443393 | 0.0038818 | 7.673658 | 0.0022564 | -1.0761378 |

| 15.0 | 108.0000 | 14.244339 | 13.3027019 | 15.185977 | 0.4782419 | 0.7556607 | 0.0038818 | 7.690340 | 0.0000190 | 0.0986368 |

| 8.0 | 107.0000 | 14.634628 | 13.6783551 | 15.590901 | 0.4856751 | -6.6346282 | 0.0040034 | 7.679589 | 0.0015075 | -0.8660743 |

| 9.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | -0.1705840 | 0.0057970 | 7.690474 | 0.0000014 | -0.0222879 |

| 6.0 | 121.0000 | 9.170584 | 8.0198612 | 10.321307 | 0.5844329 | -3.1705840 | 0.0057970 | 7.687990 | 0.0005003 | -0.4142564 |

| 7.0 | 124.0000 | 7.999717 | 6.7207582 | 9.278676 | 0.6495620 | -0.9997174 | 0.0071610 | 7.690233 | 0.0000616 | -0.1307089 |

| 15.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | 3.4876828 | 0.0041063 | 7.687472 | 0.0004274 | 0.4553003 |

| 8.0 | 114.0000 | 11.902606 | 10.9516557 | 12.853556 | 0.4829718 | -3.9026061 | 0.0039589 | 7.686714 | 0.0005157 | -0.5094289 |

| 10.0 | 115.0000 | 11.512317 | 10.5438343 | 12.480800 | 0.4918763 | -1.5123172 | 0.0041063 | 7.689915 | 0.0000804 | -0.1974258 |

Regression Model

How useful is each individual predictor in my model?

- Use the coefficients and t-tests of the slopes

Is my overall model (i.e., the regression equation) useful for predicting the outcome variable?

- Use the model summary, F-test, and \(R^2\)

Overall Model Significance

The overall model is tested with an F-test

We can think of the hypotheses for the overall test as:

\(H_0\): We cannot predict the dependent variable (over and above a model with only an intercept)

\(H_1\): We can predict the dependent variable (over and above a model with only an intercept)

The F-test is one-tailed: F is a ratio of variances, which are built from squared deviations, so the statistic can never be negative

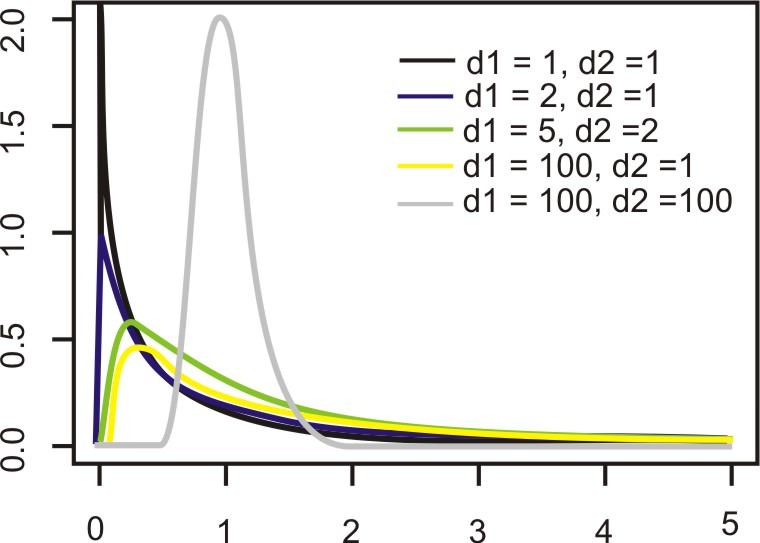

F-distribution

F-Statistic, Explained Over Unexplained

- F-statistics use measures of variance, which are sums of squares divided by relevant degrees of freedom

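\[F = \frac{SS_{Explained}/df_1}{SS_{Unexplained}/df_2} = \frac{MS_{Explained}}{MS_{Unexplained}}, \qquad df_1 = p - 1, \quad df_2 = n - p\]

Here \(p\) is the number of estimated parameters (including the intercept) and \(n\) is the sample size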

\(MS_{Explained}\) represents the reduction in error achieved by the model

\(MS_{Unexplained}\) tells us how much variation is left over from the complex model

If explained = unexplained, then F = 1

If explained > unexplained, then F > 1

If explained < unexplained, then F < 1

Calculating Mean Squares in R
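A minimal sketch of that calculation, assuming a simple regression fit with `lm()`. The built-in `mtcars` data (`mpg ~ wt`) stand in for the lecture's CESD/PIL data, which are not reproduced here, and all object names are illustrative.

```r
# Stand-in example: mtcars ships with base R; it is NOT the lecture dataset.
fit <- lm(mpg ~ wt, data = mtcars)

y <- mtcars$mpg
n <- length(y)            # sample size
p <- length(coef(fit))    # estimated parameters (intercept + slope)

ss_total       <- sum((y - mean(y))^2)   # error of the empty (intercept-only) model
ss_unexplained <- sum(resid(fit)^2)      # error left over after adding the predictor
ss_explained   <- ss_total - ss_unexplained

ms_explained   <- ss_explained / (p - 1)
ms_unexplained <- ss_unexplained / (n - p)
f_stat         <- ms_explained / ms_unexplained

# One-tailed p-value from the upper tail of the F-distribution
p_value <- pf(f_stat, df1 = p - 1, df2 = n - p, lower.tail = FALSE)

# The hand calculation should agree with R's built-in ANOVA table
anova(fit)
```

The hand-computed `f_stat` should match the `F value` column printed by `anova(fit)`, which is a quick way to confirm that the degrees of freedom were assigned correctly.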

F test

Analysis of Variance Table (Type III SS)

Model: CESD_total ~ PIL_total

| | SS | df | MS | F | PRE | p |
|---|---|---|---|---|---|---|
| Model (error reduced) | 7929.743 | 1 | 7929.743 | 134.584 | .3368 | .0000 |
| Error (from model) | 15613.882 | 265 | 58.920 | | | |
| Total (empty model) | 23543.625 | 266 | 88.510 | | | |

F(1, 265) = 134.584, p < .001.

- Because the F-test is significant, the model is said to have explanatory power
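As a check, the sums of squares and degrees of freedom reported in the table above can be plugged into the F formula (intermediate values rounded to three decimals):

\[F = \frac{7929.743 / 1}{15613.882 / 265} = \frac{7929.743}{58.920} \approx 134.58\]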

Effect Size: \(R^2\)

Called PRE (proportional reduction in error) in the book; the name is not common elsewhere

Coefficient of determination

\[R^2 = 1 - \frac{SS_{Unexplained}}{SS_{Total}} = \frac{SS_{Explained}}{SS_{Total}}\]

Standardized effect size

Amount of variance explained

- \(R^2\) of .4 means 40% of variance in the outcome variable (\(Y\)) can be explained by the predictor (\(X\))

Range: 0-1

\(R^2\)

| r.squared | adj.r.squared | sigma | statistic | p.value | df | logLik | AIC | BIC | deviance | df.residual | nobs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.3368106 | 0.334308 | 7.675957 | 134.5842 | 0 | 1 | -922.0236 | 1850.047 | 1860.809 | 15613.88 | 265 | 267 |

- In our example we get \(R^2\) of .34

- 34% of the variance in depression scores is explained by meaning in life
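The same value can be recovered by hand from the sums of squares in the ANOVA table for `CESD_total ~ PIL_total`. This is a small sketch; the object names are illustrative, and the numbers are copied from the table rather than recomputed from the raw data.

```r
# Sums of squares copied from the ANOVA table (not recomputed from raw data)
ss_explained   <- 7929.743
ss_unexplained <- 15613.882
ss_total       <- 23543.625

# Two equivalent routes to R^2
r2_from_explained   <- ss_explained / ss_total
r2_from_unexplained <- 1 - ss_unexplained / ss_total

round(c(r2_from_explained, r2_from_unexplained), 4)
# both round to 0.3368, the PRE value in the table
```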

\(R^2_{adj}\)

\[R^2_{adj} = 1 - \frac{SS_{Unexplained}/(n-K-1)}{SS_{Total}/(n-1)} = 1 - (1 - R^2)\frac{n-1}{n-K-1}\] where:

n = Sample size

K = # of predictors
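As a worked check, take the standard definition of adjusted \(R^2\), which divides each sum of squares by its degrees of freedom, and plug in the values reported earlier for this model (\(n = 267\), \(K = 1\)):

\[R^2_{adj} = 1 - \frac{15613.882/(267 - 1 - 1)}{23543.625/(267 - 1)} = 1 - \frac{58.920}{88.510} \approx 0.334\]

This agrees with the adj.r.squared value of 0.334308 in the model summary.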

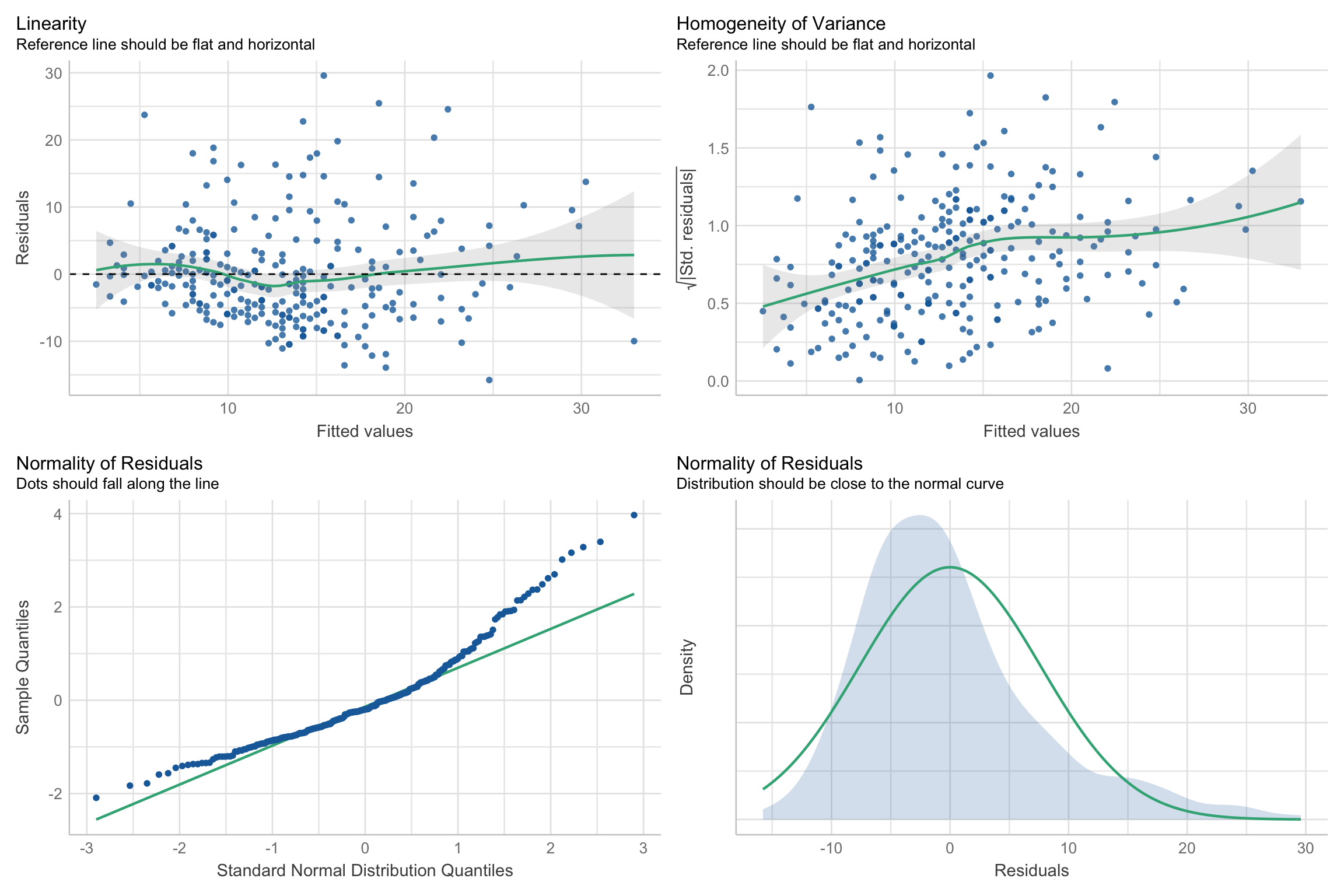

Model conditions

Assumptions

Linearity: There is a linear relationship between the response and predictor variable

Constant Variance: The variability of the errors is equal for all values of the predictor variable

Normality: The errors follow a normal distribution.

Independence: The errors are independent from each other.

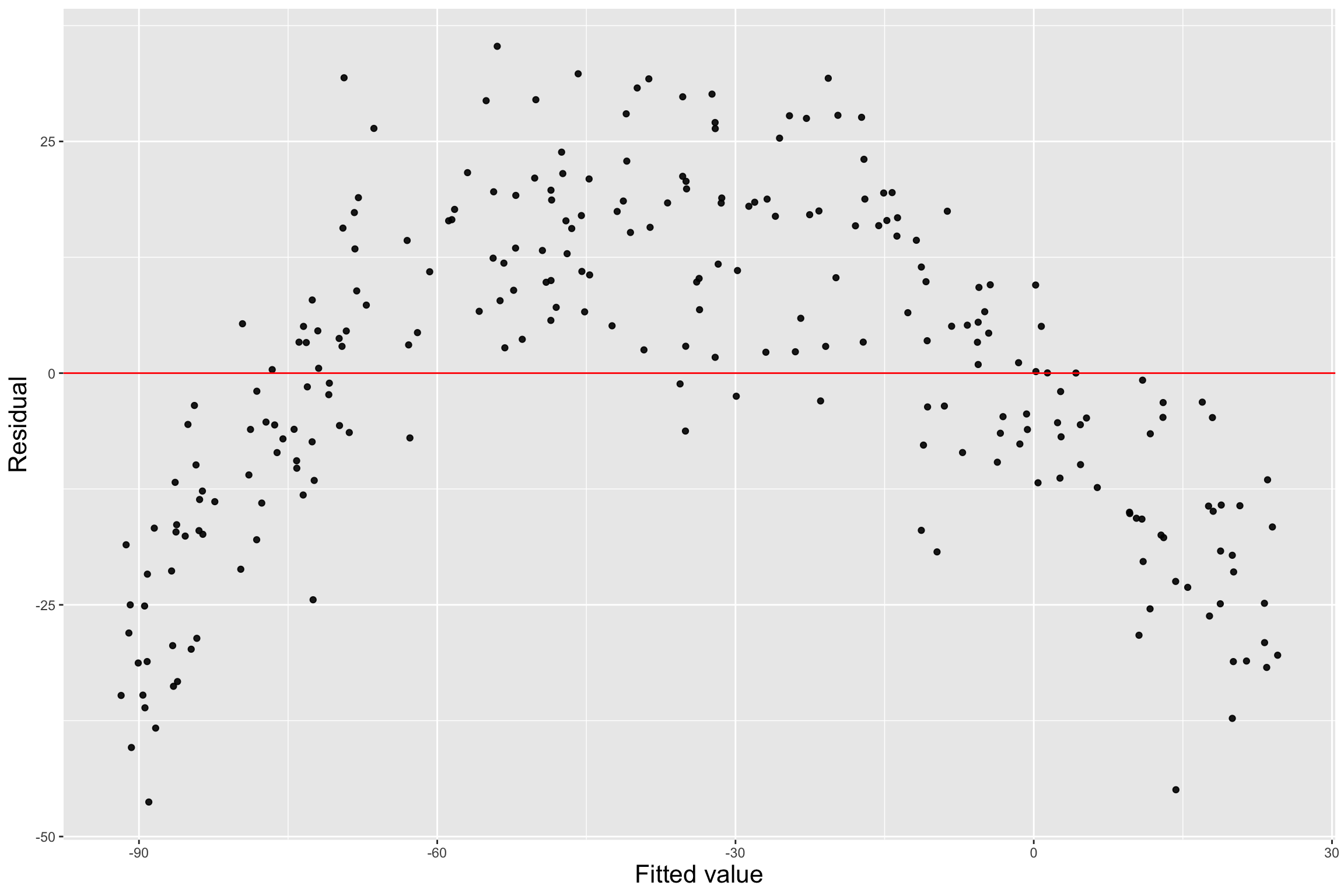

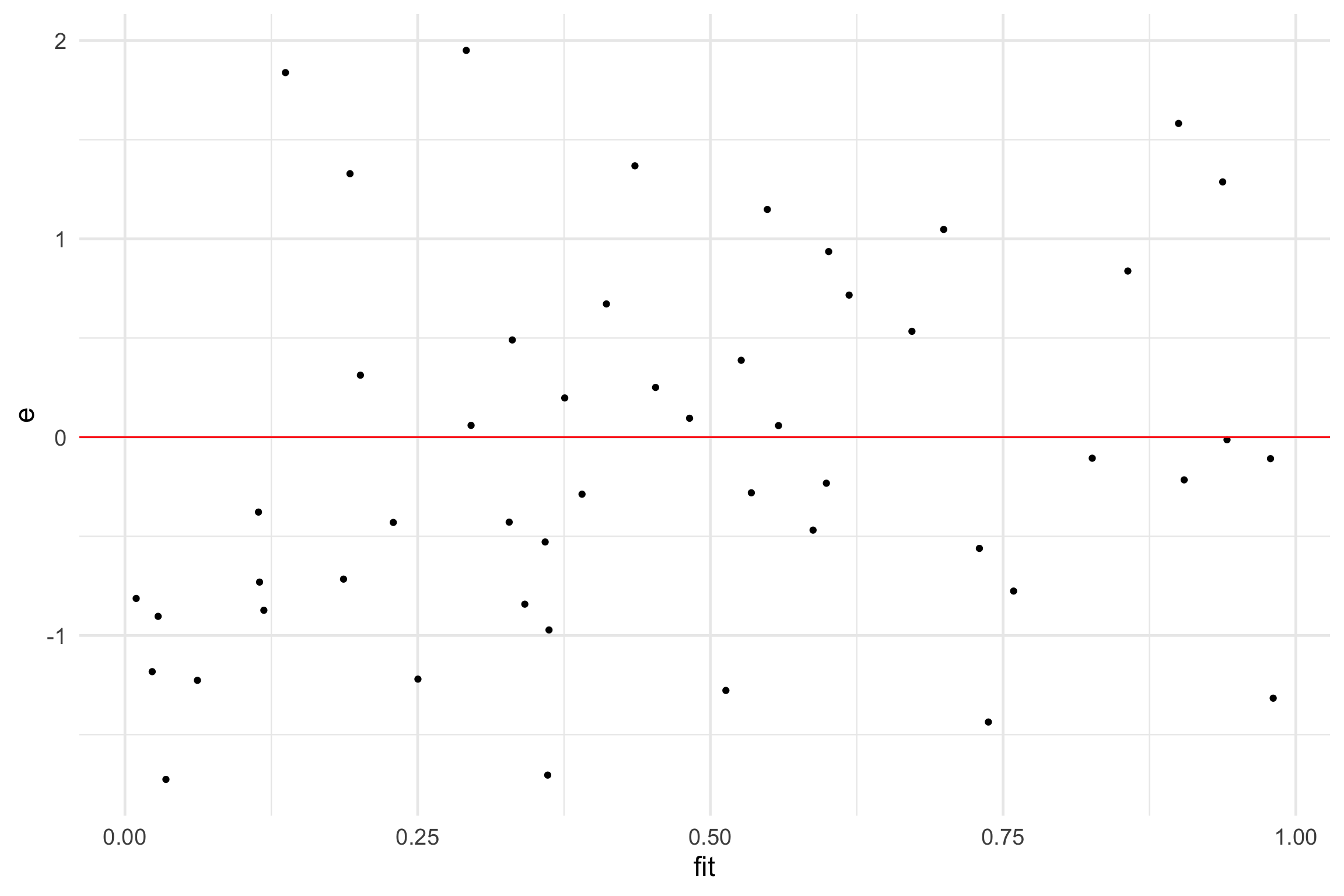

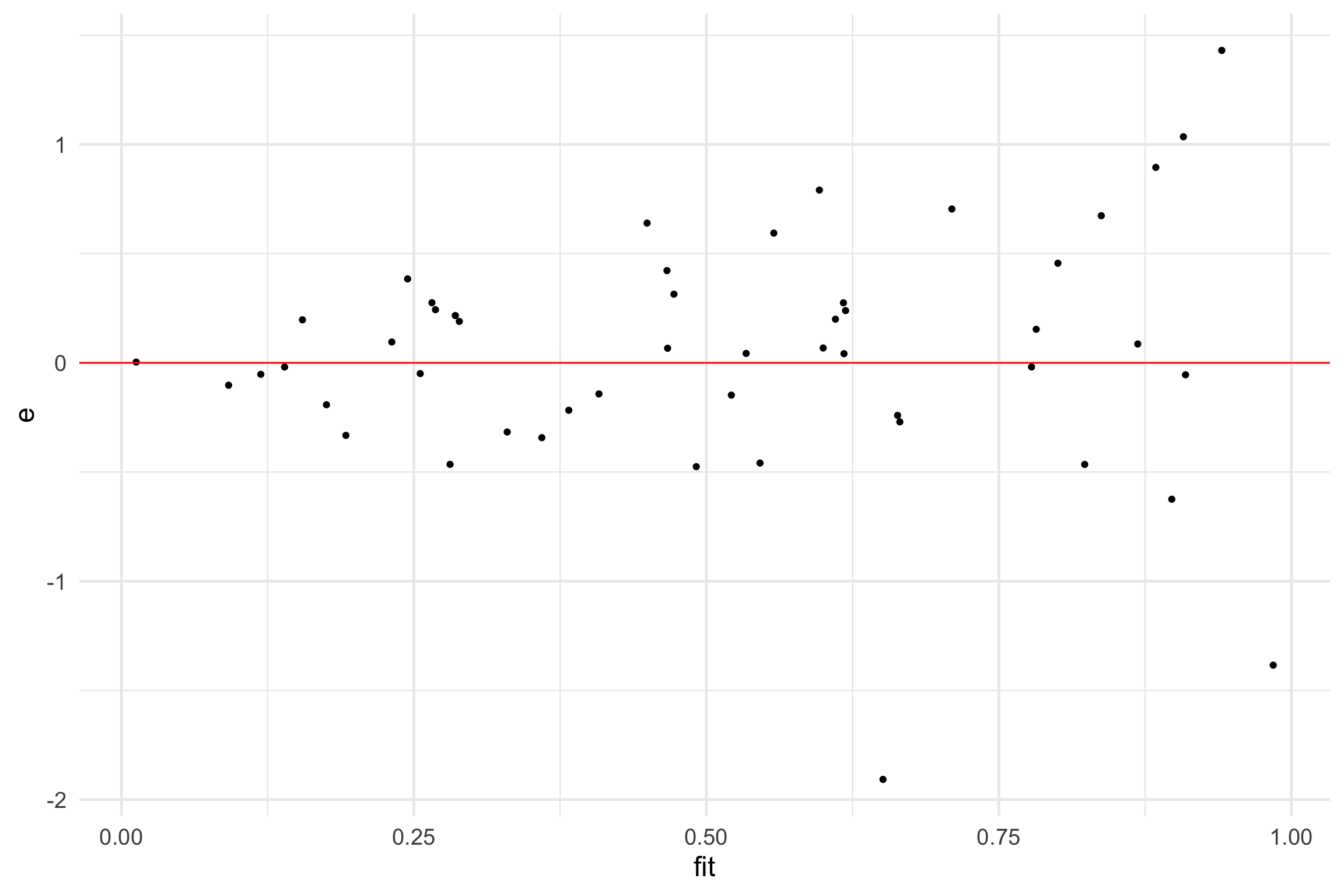

Assumptions: Linearity

- A scatter plot of the two variables works, but a residuals-versus-fitted plot is more common

❌ Violation: Nonlinear pattern

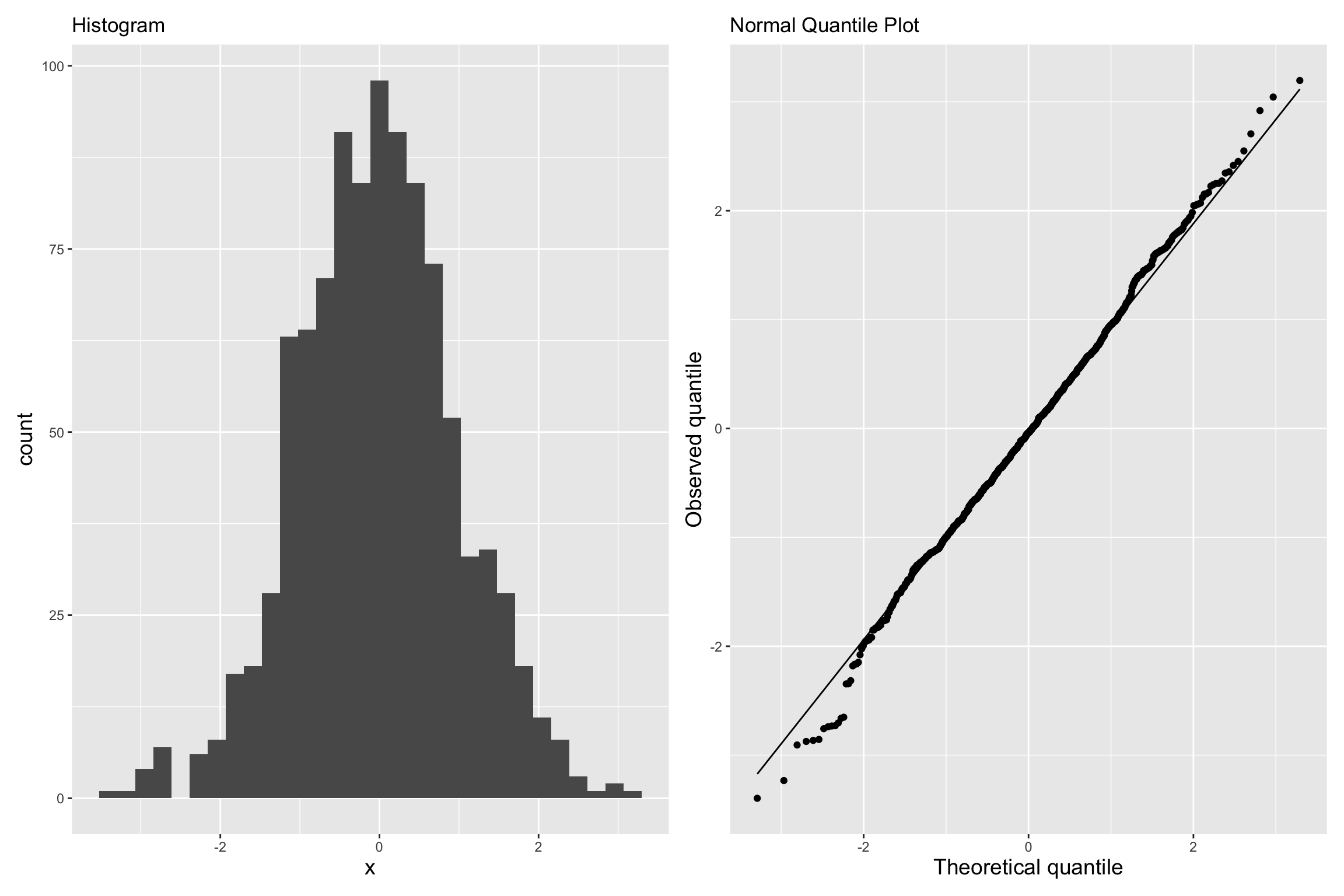
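A residuals-versus-fitted plot can be drawn with base R graphics. This is a sketch only; the built-in `mtcars` data stand in for the lecture dataset, and the smoother is an optional visual aid rather than part of the slides.

```r
# Stand-in example: mtcars ships with base R; it is NOT the lecture dataset.
fit <- lm(mpg ~ wt, data = mtcars)

# Residuals on the y-axis, fitted values on the x-axis
plot(fitted(fit), resid(fit),
     xlab = "Fitted values", ylab = "Residuals",
     main = "Residuals vs. fitted")
abline(h = 0, lty = 2)                               # reference line at zero
lines(lowess(fitted(fit), resid(fit)), col = "red")  # smooth trend through the residuals
```

If the smooth trend bends clearly away from the zero line, that suggests a nonlinear pattern the straight-line model is missing.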

Assumptions: Normality of Errors

✅ Points fall along a straight diagonal line on the normal quantile plot.

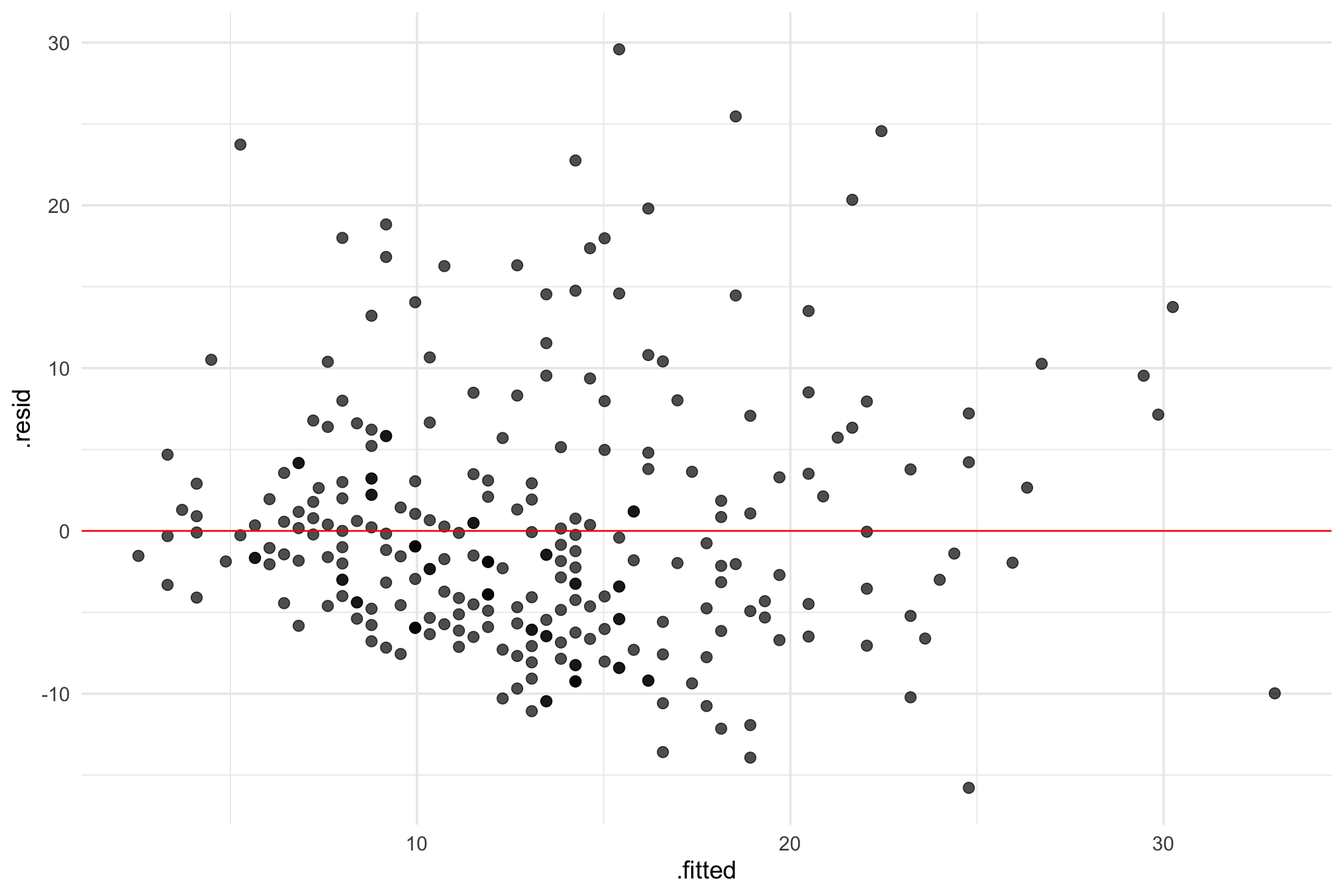
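A normal quantile plot of the residuals takes two base R calls. Again this is a sketch with the built-in `mtcars` data standing in for the lecture dataset.

```r
# Stand-in example: mtcars ships with base R; it is NOT the lecture dataset.
fit <- lm(mpg ~ wt, data = mtcars)

# Sample quantiles of the residuals against theoretical normal quantiles
qqnorm(resid(fit), main = "Normal Q-Q plot of residuals")
qqline(resid(fit))   # reference line through the first and third quartiles
```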

Assumptions: Equal Variances

- Constant error

- No correlation between predictor and residuals

- What are we looking for?

- Random variation above and below 0

- No patterns

- Width of the band of points is constant

Assumptions: Equal Variances

- Good

✅ There is no distinguishable pattern or structure. The residuals are randomly scattered.

Assumptions: Equal Variances

- Bad

❌ There is a distinguishable pattern or structure.

Assumption: Independence

- Let’s pretend this one is met 😀

- We can often check the independence assumption based on the context of the data and how the observations were collected.

easystats: Performance

- Check model assumptions with tests
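One way to run such checks is sketched below, assuming the `performance` package is installed (the code skips the checks otherwise). The built-in `mtcars` data stand in for the lecture dataset, and which checks the slides actually ran is not shown, so the selection here is illustrative.

```r
# Stand-in example: mtcars ships with base R; it is NOT the lecture dataset.
fit <- lm(mpg ~ wt, data = mtcars)

if (requireNamespace("performance", quietly = TRUE)) {
  # Test of normality of the residuals
  print(performance::check_normality(fit))
  # Test of constant error variance
  print(performance::check_heteroscedasticity(fit))
  # performance::check_model(fit)  # panel of diagnostic plots; also needs the `see` package
}
```

These tests complement the diagnostic plots rather than replace them: with large samples, even trivial departures from the assumptions can come out as significant.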

Assumption violations: What can be done?

Non-linearity?

Non-linear regression

- Polynomials (e.g., \(x^2\))

Non-normality

- Transformations (e.g., log)

Heteroskedasticity

- Use robust SEs
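The three remedies above might look like this in R. This is a hedged sketch: `mtcars` is a stand-in dataset, the HC3 estimator is one common choice rather than something the slides specify, and the robust-SE step assumes the `sandwich` and `lmtest` packages are installed.

```r
# Stand-in example: mtcars ships with base R; it is NOT the lecture dataset.
fit <- lm(mpg ~ wt, data = mtcars)

# Non-linearity: add a squared term for the predictor
fit_poly <- lm(mpg ~ wt + I(wt^2), data = mtcars)

# Non-normality: log-transform the outcome (requires strictly positive values)
fit_log <- lm(log(mpg) ~ wt, data = mtcars)

# Heteroskedasticity: keep the OLS estimates, swap in robust standard errors
if (requireNamespace("sandwich", quietly = TRUE) &&
    requireNamespace("lmtest", quietly = TRUE)) {
  print(lmtest::coeftest(fit, vcov. = sandwich::vcovHC(fit, type = "HC3")))
}
```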

Reporting

The `report` function in `easystats` is the greatest thing ever!
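A call of roughly this shape produces a write-up like the one that follows. It is a sketch only: the name of the lecture's data frame is not shown, so the built-in `mtcars` data stand in for it, and the code assumes the `report` package is installed.

```r
# Stand-in example: mtcars ships with base R; it is NOT the lecture dataset.
fit <- lm(mpg ~ wt, data = mtcars)

if (requireNamespace("report", quietly = TRUE)) {
  # Prints a plain-language summary of the fitted model
  print(report::report(fit))
}
```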

We fitted a linear model (estimated using OLS) to predict CESD_total with PIL_total (formula: CESD_total ~ PIL_total). The model explains a statistically significant and substantial proportion of variance (R2 = 0.34, F(1, 265) = 134.58, p < .001, adj. R2 = 0.33). The model's intercept, corresponding to PIL_total = 0, is at 56.40 (95% CI [49.01, 63.78], t(265) = 15.03, p < .001). Within this model:

- The effect of PIL total is statistically significant and negative (beta = -0.39, 95% CI [-0.46, -0.32], t(265) = -11.60, p < .001; Std. beta = -0.58, 95% CI [-0.68, -0.48])

Standardized parameters were obtained by fitting the model on a standardized version of the dataset. 95% Confidence Intervals (CIs) and p-values were computed using a Wald t-distribution approximation.

PSY 503: Foundations of Statistics in Psychology